10 Game-Changing Software Deployment Best Practices for 2026

Master our top 10 software deployment best practices. From CI/CD to Canary, learn actionable tips to ship code faster, safer, and with zero downtime.

Remember the good ol' days? When deploying software meant a frantic all-nighter, fueled by stale coffee and prayer, hoping you didn't just brick the entire system? Yeah, me neither, that sounds awful. In a world where speed and stability are non-negotiable, slow, risky deployments are a one-way ticket to obscurity. The difference between market leaders and the competition often boils down to one critical capability: how efficiently and safely they can get new features into the hands of their users.

This isn't just about pushing code; it's a strategic advantage that impacts everything from user satisfaction to your bottom line. Bad deployments erode trust, burn out your engineers, and create a culture of fear around shipping new things. Good deployments, on the other hand, build momentum and create a positive feedback loop, allowing for rapid iteration and innovation. Think of it as the engine of your development lifecycle - a clunky, unreliable one will stall your progress, while a finely tuned one propels you forward.

We’re here to give you the blueprint for that high-performance engine. This guide cuts through the noise and dives straight into the most crucial software deployment best practices that modern engineering teams rely on. From bulletproof automation pipelines and clever rollout strategies to robust monitoring and infrastructure management, we're going to break down 10 actionable principles that separate the pros from the... well, the people still ordering late-night panic pizza. Get ready for practical insights that will transform your deployment process from a source of anxiety into a well-oiled, predictable, and even boring routine. And in the world of deployments, boring is a very, very good thing.

1. Automated Deployment Pipelines (CI/CD)

Gone are the days of manual, white-knuckle deployments that required a whole team, a six-pack of energy drinks, and a prayer. Today, one of the most crucial software deployment best practices is leaning on automation through CI/CD (Continuous Integration/Continuous Delivery or Deployment) pipelines. Think of it as an automated assembly line for your code: it picks up every new commit, runs the tests, builds the software, and deploys it without anyone needing to click a "deploy now" button at 3 AM.

This approach is a game-changer for complex platforms like Zemith, where multiple AI models and third-party tools are constantly being updated. A CI/CD pipeline ensures that an update to our document assistant tool doesn't accidentally break the coding copilot. By automating the entire process, you drastically reduce human error, speed up release cycles, and let developers focus on building cool stuff instead of managing deployments. To fully grasp the power of automated software delivery, understanding the core concepts is essential. You can dive deeper with this excellent guide on What is a CI/CD Pipeline.

How to Get Started

- Start Small with Automated Testing: Before you automate everything, focus on building a robust suite of automated tests. This is your safety net. If you can't trust your tests, you'll never trust your pipeline.

- Leverage Modern Tools: Tools like GitHub Actions, GitLab CI/CD, and Jenkins make setting up pipelines easier than ever. For instance, a GitHub Actions workflow could be configured to automatically test and deploy a new version of Zemith’s AI-powered summarizer every time code is merged into the main branch.

- Integrate with Your Infrastructure: A solid CI/CD pipeline often works hand-in-hand with Infrastructure as Code (IaC) to provision testing and staging environments automatically, ensuring consistency from development to production.

- Establish Strong Foundations: A reliable CI/CD pipeline relies heavily on clean code and disciplined development habits. To learn more about this foundational element, check out our guide on version control best practices.
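To make the "assembly line" idea concrete, here's a toy model in Python. It's a sketch, not how GitHub Actions, GitLab CI/CD, or Jenkins work under the hood: each stage is a hypothetical callable that returns `True` on success, stages run in order, and the pipeline halts at the first failure so a broken build never reaches the deploy step.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stage:
    """One pipeline step. `run` is a hypothetical callable returning True on success."""
    name: str
    run: Callable[[], bool]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns a log of outcomes so you can see how far the change got.
    """
    log: list[str] = []
    for stage in stages:
        ok = stage.run()
        log.append(f"{stage.name}: {'passed' if ok else 'FAILED'}")
        if not ok:
            log.append("pipeline halted")  # later stages, including deploy, never run
            break
    return log


if __name__ == "__main__":
    deployed: list[str] = []

    def deploy() -> bool:
        deployed.append("v2")  # stands in for pushing a release
        return True

    stages = [
        Stage("test", lambda: True),
        Stage("build", lambda: False),  # simulate a broken build
        Stage("deploy", deploy),
    ]
    print(run_pipeline(stages))  # ['test: passed', 'build: FAILED', 'pipeline halted']
    print("deployed:", deployed)  # deployed: []
```

Real CI systems express the same ordering declaratively, for example by making a deploy job depend on the test and build jobs, but the fail-fast principle is identical.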

2. Blue-Green Deployments

Imagine trying to change a tire on a car while it's still speeding down the highway. Sounds impossible, right? That’s what deploying major updates to a live application can feel like. Blue-Green deployment is one of the most effective software deployment best practices for avoiding this high-stakes scenario. It involves running two identical production environments: "Blue" (the current live version) and "Green" (the new version). Once the Green environment is ready and fully tested, you simply switch the router to direct all user traffic from Blue to Green. Easy peasy.

This strategy is a lifesaver for platforms like Zemith, where uptime is non-negotiable. If we're rolling out a significant update to our AI-powered coding copilot, we can deploy it to the Green environment without affecting users interacting with the live Blue environment. If something goes wrong after the switch, rolling back is as simple as pointing the router back to the Blue environment, which is still running the old, stable version. It's the ultimate "undo" button for deployments, ensuring zero downtime and a massive reduction in release-day anxiety. This approach provides a seamless transition and a powerful safety net. For a deeper dive, Netflix’s tech blog offers great insights into how they implement this strategy.

How to Get Started

- Automate the Switch: The traffic switch should be an automated, one-click (or no-click) process. Manual switching is a recipe for mistakes. Use load balancers or DNS routing tools to handle this seamlessly.

- Implement Robust Health Checks: Before flipping the switch, your Green environment needs a full physical. Run a comprehensive suite of automated tests and health checks to confirm it’s 100% ready for prime time. At Zemith, we verify that our AI models in the Green environment are responding with the same accuracy and speed as in Blue.

- Manage Database Schema Changes Carefully: Databases are often the trickiest part of a Blue-Green deployment. Plan for backward compatibility so both the Blue and Green versions can work with the same database schema during the transition.

- Keep Environments Identical: The Blue and Green environments should be as identical as possible, from infrastructure to configuration. Any differences introduce risk. This consistency is key to a reliable deployment process.
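Stripped to its essentials, the switch is just "which environment is live?" plus a health gate. The sketch below is a hypothetical in-memory stand-in for a load balancer or DNS record (the `BlueGreenRouter` class and its health-check callable are illustrative, not a real tool's API). It shows the two properties that matter: traffic only moves if Green passes its checks, and rollback is simply swapping back to the still-running Blue.

```python
from typing import Callable


class BlueGreenRouter:
    """Hypothetical stand-in for a load balancer that sends all traffic to one environment."""

    def __init__(self, live: str = "blue", idle: str = "green") -> None:
        self.live = live  # environment currently serving users
        self.idle = idle  # environment holding the other version

    def switch(self, health_check: Callable[[str], bool]) -> bool:
        """Promote the idle environment only if it passes its health check."""
        if not health_check(self.idle):
            return False  # keep serving from the current live environment
        self.live, self.idle = self.idle, self.live
        return True

    def rollback(self) -> None:
        """Send traffic back to the previous environment, which was never torn down."""
        self.live, self.idle = self.idle, self.live


if __name__ == "__main__":
    router = BlueGreenRouter()
    print(router.switch(lambda env: False), router.live)  # False blue
    print(router.switch(lambda env: True), router.live)   # True green
    router.rollback()
    print(router.live)                                     # blue
```

In a real setup, `health_check` would run your smoke tests and the accuracy and speed comparisons described above, and the swap would update a load balancer target rather than a Python attribute.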

3. Canary Deployments

Imagine releasing a brand-new feature and holding your breath, hoping nothing explodes. Canary deployments offer a better way, letting you exhale. The idea is simple: instead of pushing a new version to everyone at once, you release it to a small, controlled group of users first, the "canaries in the coal mine." If they start chirping (or, you know, reporting bugs), you know to pull back before it affects your entire user base.

This technique is invaluable for a platform like Zemith, where a new AI model's performance can be unpredictable. We might roll out an enhanced image generation model to just 5% of our creative professional users. By monitoring their experience closely, we can catch performance issues or unexpected outputs with minimal impact. This approach turns a high-stakes gamble into a calculated, data-driven decision, ensuring that by the time a feature reaches everyone, it’s already been battle-tested in the real world.

How to Get Started

- Define Success Before You Start: What does a "good" deployment look like? Before releasing to your canary group, establish clear success metrics. This could be anything from error rates and API latency to user engagement with the new feature. Without these, you're just guessing.

- Segment Your Canaries Intelligently: Don't just pick users at random. Segment your canary group based on relevant criteria. For instance, Uber might test a new algorithm in a single city, while Zemith could test a new coding assistant feature with users who have opted into a beta program.

- Monitor Like a Hawk: Real-time monitoring is non-negotiable. Use tools like Prometheus or Datadog to track your predefined success metrics. If key indicators like error rates or response times cross a dangerous threshold, you need to know immediately.

- Automate Rollbacks: The best canary setups include automated rollbacks. If your monitoring tools detect that a critical metric has gone south, the system should automatically revert the canary group to the stable version, no human intervention required. This is your ultimate safety net.
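Here's what an automated canary check might look like once you've defined success up front. It's a simplified sketch, assuming you can pull request counts, error counts, and p95 latency for both the stable baseline and the canary from your monitoring system; the `Metrics` and `canary_verdict` names are made up for illustration, and every threshold is a placeholder, not a recommendation.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    """Aggregated numbers for one version over the same time window."""
    requests: int
    errors: int
    p95_latency_ms: float

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_verdict(baseline: Metrics, canary: Metrics,
                   max_error_ratio: float = 1.5,
                   error_rate_floor: float = 0.001,
                   max_p95_latency_ms: float = 800.0,
                   min_requests: int = 500) -> str:
    """Return "wait", "rollback", or "promote" for the canary.

    All thresholds are illustrative placeholders; set your own before rollout.
    """
    if canary.requests < min_requests:
        return "wait"  # too little traffic to judge; avoid deciding on noise
    # Tolerate some drift from the baseline, with a floor so a near-zero
    # baseline error rate doesn't make a single error fatal.
    allowed_error_rate = max(baseline.error_rate * max_error_ratio, error_rate_floor)
    if canary.error_rate > allowed_error_rate:
        return "rollback"
    if canary.p95_latency_ms > max_p95_latency_ms:
        return "rollback"
    return "promote"


if __name__ == "__main__":
    baseline = Metrics(requests=10_000, errors=20, p95_latency_ms=300.0)
    print(canary_verdict(baseline, Metrics(100, 0, 250.0)))     # wait
    print(canary_verdict(baseline, Metrics(2_000, 3, 320.0)))   # promote
    print(canary_verdict(baseline, Metrics(2_000, 30, 320.0)))  # rollback
```

The `min_requests` guard matters: judging a canary on a handful of requests is how you end up rolling back a healthy release, or promoting a broken one, on noise.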

4. Infrastructure as Code (IaC)

Imagine trying to build the exact same elaborate Lego castle twice, just from memory. You'd probably miss a few pieces. That's what manual infrastructure management is like. Infrastructure as Code (IaC) flips the script by treating your infrastructure (servers, databases, networks) like software. You define it in code, check it into version control, and deploy it automatically, ensuring a perfect, repeatable replica every single time. No more "it worked on my machine" excuses for your entire server fleet.

For a complex, distributed platform like Zemith, this isn't just a nice-to-have; it's a necessity. IaC allows us to programmatically manage the GPU instances needed for our AI image generation models and ensure environment parity between a developer's laptop and our global production clusters. When infrastructure is code, you get consistency, speed, and a clear audit trail for every change, making it a cornerstone of modern software deployment best practices. For those looking to deepen their understanding, this guide on 10 essential Infrastructure as Code best practices offers further valuable insights into implementing IaC effectively.

How to Get Started

- Version Control Everything: The first rule of IaC is to treat your infrastructure code like application code. Store it in Git. This gives you history, collaboration, and the ability to review changes before they're applied.

- Pick Your Tool: Powerful tools like Terraform, AWS CloudFormation, or Ansible are the workhorses of IaC. At Zemith, we might use a Terraform script to spin up a new, perfectly configured environment for testing updates to our AI coding copilot in minutes.

- Start with a Single Service: Don't try to boil the ocean. Begin by codifying a small, non-critical piece of your infrastructure. Once you’re comfortable, expand from there.

- Document Your Code: Your infrastructure code defines your entire setup, making it critical to maintain. Good comments and clear documentation are essential for long-term success. To level up your documentation skills, explore our guide on code documentation best practices.
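Under the hood, most IaC tools follow a declare, compare, apply loop: you describe the desired state, the tool diffs it against what actually exists, and it only changes what's different. The toy version below illustrates that idea with plain dictionaries. It is a heavily simplified model, not Terraform's or CloudFormation's actual data model, and the resource names are made up.

```python
Resources = dict[str, dict[str, object]]


def plan(desired: Resources, actual: Resources) -> list[tuple[str, str]]:
    """Diff declared resources against existing ones and list the actions needed."""
    actions: list[tuple[str, str]] = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
    return actions


def apply(desired: Resources, actual: Resources) -> None:
    """Mutate `actual` until it matches `desired` (a stand-in for real cloud API calls)."""
    for action, name in plan(desired, actual):
        if action == "delete":
            del actual[name]
        else:
            actual[name] = dict(desired[name])


if __name__ == "__main__":
    desired = {"gpu-pool": {"size": "large", "count": 4}, "staging-db": {"tier": "small"}}
    actual = {"gpu-pool": {"size": "large", "count": 2}, "old-cache": {"tier": "small"}}
    print(plan(desired, actual))
    # [('update', 'gpu-pool'), ('delete', 'old-cache'), ('create', 'staging-db')]
    apply(desired, actual)
    print(plan(desired, actual))  # []
```

The second `plan` comes back empty: running the same definition twice is a no-op. That idempotence is what turns your environment into a repeatable replica instead of a hand-built one-off.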

5. Containerization and Orchestration

If your application feels like a delicate Jenga tower where touching one piece could bring the whole thing crashing down, it's time to talk about containers. Containerization, powered by tools like Docker, bundles an application and all its dependencies (libraries, settings, system tools) into a single, neat package. This package, or container, runs consistently anywhere, from a developer's laptop to a massive production server. It’s the ultimate "it works on my machine" killer.

This practice is non-negotiable for complex platforms. At Zemith, we containerize each AI model integration, like Claude and GPT, as separate services. If the image generation service gets a surge in traffic, orchestration tools like Kubernetes can add replicas of just that service without touching the document assistant or coding copilot. This isolates failures, optimizes resource usage, and makes our entire software deployment process more resilient and predictable.

How to Get Started

- Build Lean, Mean Docker Images: Don't just stuff everything into a container. Use multi-stage builds to create minimal, production-ready images that are smaller, faster, and more secure. A smaller image means a quicker deployment.

- Implement Health Checks: Your orchestration system (like Kubernetes) needs to know if your application is healthy. Implement health check endpoints (`/healthz`, `/readyz`) in your containers so the orchestrator can automatically restart failing instances or route traffic away from them.

- Manage Resources Wisely: Set CPU and memory requests and limits for your containers. This prevents a single resource-hungry container from becoming a "noisy neighbor" and hogging all the server resources, ensuring stable performance for all services.

- Isolate and Scale: Containerization pairs beautifully with a microservices-based design. For a deeper dive into this powerful combination, explore our guide on microservices architecture patterns to see how you can build independently scalable services.
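Here's a minimal, standard-library-only sketch of the two probe endpoints. The split follows the usual Kubernetes convention: a failing liveness check (`/healthz`) gets the container restarted, while a failing readiness check (`/readyz`) just takes it out of load balancing until it recovers. The `APP_STATE` flags are a hypothetical placeholder for real checks such as "model weights loaded" or "database reachable".

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical app state; a real service would derive these from actual checks.
APP_STATE = {"alive": True, "ready": False}


def health_response(path: str, state: dict[str, bool]) -> tuple[int, str]:
    """Map a probe path to an HTTP status code and body."""
    if path == "/healthz":
        # Liveness: is the process fundamentally working? Failing -> restart.
        return (200, "ok") if state["alive"] else (500, "unhealthy")
    if path == "/readyz":
        # Readiness: can it serve traffic right now? Failing -> no traffic routed.
        return (200, "ready") if state["ready"] else (503, "not ready")
    return (404, "not found")


class ProbeHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = health_response(self.path, APP_STATE)
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    """Block forever, answering probe requests on the given port."""
    HTTPServer(("0.0.0.0", port), ProbeHandler).serve_forever()
```

Calling `serve()` starts the endpoints; you'd then point the pod's `livenessProbe` and `readinessProbe` `httpGet` settings at these paths on port 8080. Keep the liveness check cheap and free of external dependencies, or a slow database can trigger a wave of needless restarts.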

6. Feature Flags and Progressive Rollouts

Deploying a major feature can feel like a high-stakes bet, where you push your changes live and hope for the best. Feature flags, also known as feature toggles, offer a much safer way to play the game. They are essentially 'if' statements in your code that let you turn features on or off for specific users without needing to redeploy anything. This practice allows for progressive rollouts, where you gradually release a feature to a small percentage of your user base, monitor its impact, and slowly expand its audience.

For a complex platform like Zemith, this is a non-negotiable part of our software deployment best practices. Imagine we've developed a new AI model for summarizing dense research papers. Instead of unleashing it on everyone at once, we can use a feature flag to enable it for just 5% of our power users. We can then gather feedback, fix bugs, and ensure the model performs as expected before rolling it out to 25%, 50%, and eventually, 100% of our users. This method de-risks deployment by separating the act of deploying code from the act of releasing a feature.

How to Get Started

- Centralize Flag Management: Don't hardcode flags directly into your application logic. Use a dedicated feature flag management service like LaunchDarkly or an internal configuration system. This allows non-technical team members to control feature releases.

- Establish Clear Naming Conventions: A flag named `newThingy` is a recipe for disaster. Create a clear naming convention that includes the feature name, creation date, and intended audience (e.g., `ai-summary-model-v2-beta-users-2024-q3`).

- Plan for Cleanup: Feature flags are not meant to live forever. They add complexity to your codebase. Implement a process for regularly reviewing and removing old flags once a feature is fully rolled out or abandoned. This technical debt cleanup is crucial.

- Tie Flags to Business Metrics: Use flags for more than just on/off switches. They are powerful tools for A/B testing and experimentation. For Zemith, we could tie a new UI flag to metrics like user engagement or task completion time to get quantitative data on its effectiveness.
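Services like LaunchDarkly handle percentage rollouts for you, but the core trick is worth seeing. A common approach, sketched here as an illustration rather than any vendor's actual algorithm, is to hash the flag name together with the user ID into a stable bucket and enable the flag for buckets below the rollout percentage. Because a user's bucket never changes, everyone in the 5% group stays enabled as you widen to 25%, 50%, and 100%.

```python
import hashlib


def rollout_bucket(flag: str, user_id: str) -> int:
    """Map a (flag, user) pair to a stable bucket from 0 to 9999 (hundredths of a percent)."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 10_000


def is_enabled(flag: str, user_id: str, rollout_percent: float) -> bool:
    """True if this user falls inside the flag's current rollout percentage."""
    return rollout_bucket(flag, user_id) < rollout_percent * 100


if __name__ == "__main__":
    flag = "ai-summary-model-v2-beta-users-2024-q3"
    users = [f"user-{i}" for i in range(10_000)]
    for percent in (5, 25, 50, 100):
        enabled = sum(is_enabled(flag, u, percent) for u in users)
        print(f"{percent:>3}% rollout -> {enabled} of {len(users)} users")
```

Including the flag name in the hash means each flag samples a different slice of users, so the same unlucky 5% aren't the guinea pigs for every experiment.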

7. Monitoring, Logging, and Observability

Deploying your code is only half the battle; the real fun begins when you have to figure out what it's doing in the wild. Pushing code without visibility is like flying a plane blindfolded. That’s where observability comes in, a trifecta of metrics, logs, and traces that gives you deep insight into your system's health and performance. It's not just about knowing if something is broken, but why it's broken.

For a complex platform like Zemith, with its web of interconnected AI models and services, this practice is non-negotiable. Observability lets us see if a latency spike in our Claude integration is impacting the user experience of our document assistant or if a new image generation model is throwing unexpected errors. By adopting observability as a core part of our software deployment best practices, we can spot and fix issues before our users even notice them, ensuring a smooth, reliable platform.

How to Get Started

- Instrument Everything: Start by adding structured logging and metrics to your application from day one. Use correlation IDs to trace a single user request as it hops across multiple services, making it easier to pinpoint bottlenecks.

- Embrace Distributed Tracing: For complex workflows, like a Zemith user asking the coding copilot to analyze a document, distributed tracing is essential. Instrumenting your services with a standard such as OpenTelemetry lets a tracing backend visualize the entire journey of a request, showing you exactly where time is being spent.

- Set Up Meaningful Dashboards: Create dashboards tailored to different audiences. Developers might need granular performance metrics for a specific service, while executives need a high-level view of overall system health and user engagement.

- Establish and Monitor SLOs: Define Service Level Objectives (SLOs) for your critical user journeys. This helps you quantify reliability and use error budgets to make data-driven decisions about when to build new features versus when to focus on stability. For a deeper dive into troubleshooting with this data, explore our guide on how to debug code.
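Error budgets sound abstract until you do the arithmetic. With an availability SLO of 99.9% over a window of 1,000,000 requests, you're allowed about 1,000 failed requests; if 250 have failed so far, 75% of the budget is left. The sketch below encodes that calculation. The function and field names are made up, and the "freeze releases once the budget is spent" rule is one example policy, not a universal standard.

```python
from dataclasses import dataclass


@dataclass
class BudgetReport:
    allowed_failures: float
    remaining_failures: float
    remaining_pct: float
    freeze_releases: bool  # example policy: focus on stability once the budget is gone


def error_budget(slo: float, total_requests: int, failed_requests: int) -> BudgetReport:
    """Summarize an availability SLO's error budget for one measurement window.

    `slo` is a fraction, e.g. 0.999 for "99.9% of requests succeed".
    """
    if not 0 < slo < 1:
        raise ValueError("slo must be strictly between 0 and 1")
    if total_requests <= 0:
        raise ValueError("need at least one request in the window")
    allowed = (1 - slo) * total_requests
    remaining = allowed - failed_requests
    return BudgetReport(
        allowed_failures=allowed,
        remaining_failures=remaining,
        remaining_pct=100 * remaining / allowed,
        freeze_releases=remaining <= 0,
    )


if __name__ == "__main__":
    report = error_budget(slo=0.999, total_requests=1_000_000, failed_requests=250)
    print(f"allowed ~{report.allowed_failures:.0f}, left {report.remaining_pct:.1f}%")
    # allowed ~1000, left 75.0%
```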

8. Automated Testing, Test Coverage, and Security Scanning

Deploying without a comprehensive automated testing and security strategy is like trying to cross a busy highway blindfolded. Sure, you might make it, but the odds are not in your favor. This practice involves running a suite of automated tests (unit, integration, end-to-end) and security scans to catch bugs and vulnerabilities before they wreak havoc in production. It’s the ultimate quality gatekeeper in your deployment process.

For a platform like Zemith, this is non-negotiable. Our automated tests ensure that a new update to our AI research assistant doesn't break the document summarizer, while security scans prevent vulnerabilities like hardcoded API keys from ever seeing the light of day. By integrating these checks directly into the CI/CD pipeline, you build a safety net that validates every single change. This is a cornerstone of modern software deployment best practices, ensuring reliability and trustworthiness.

How to Get Started

- Follow the Testing Pyramid: Build a solid foundation with lots of fast unit tests, a healthy layer of integration tests (e.g., ensuring our Claude API calls work as expected), and a few critical end-to-end tests that mimic real user journeys. This structure maximizes coverage without slowing down your pipeline.

- Integrate Security Scanning Early: Don't wait until the last minute to think about security. Use tools like GitHub Advanced Security or Snyk to scan for vulnerable dependencies and code issues with every commit. The earlier you find a problem, the cheaper and easier it is to fix.

- Mock External Dependencies: When testing integrations with third-party services, like the AI models Zemith uses, mock their APIs. This keeps your tests fast, reliable, and independent of external systems that might be slow or unavailable.

- Aim for Meaningful Coverage: Don't just chase a high code coverage percentage. Focus on testing critical paths, error conditions, and edge cases. It's better to have 70% coverage of important logic than 95% coverage that misses the tricky parts.
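Here's what mocking an external AI dependency can look like with Python's built-in `unittest.mock`. The `summarize` function and its `client.complete(prompt)` call are hypothetical stand-ins for your own wrapper around a provider SDK. The point is that the tests never touch the network and still cover the edge cases (empty input, blank replies) where bugs like to hide.

```python
from unittest.mock import Mock


def summarize(document: str, client) -> str:
    """Summarize via an external model. `client.complete` is a hypothetical SDK call."""
    if not document.strip():
        return ""  # don't spend an API call on empty input
    reply = client.complete(f"Summarize:\n{document}")
    return reply.strip() or "(no summary available)"


def test_returns_trimmed_model_reply() -> None:
    client = Mock()
    client.complete.return_value = "  A short summary.  "
    assert summarize("Long report text...", client) == "A short summary."
    client.complete.assert_called_once()


def test_skips_api_for_empty_input() -> None:
    client = Mock()
    assert summarize("   ", client) == ""
    client.complete.assert_not_called()


def test_handles_blank_reply() -> None:
    client = Mock()
    client.complete.return_value = "   "
    assert summarize("Some text", client) == "(no summary available)"


if __name__ == "__main__":
    for test in (test_returns_trimmed_model_reply,
                 test_skips_api_for_empty_input,
                 test_handles_blank_reply):
        test()
    print("all tests passed")
```

pytest would discover the `test_` functions automatically; the `__main__` block just lets the file run on its own.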

9. Database Migration and Versioning

Let's talk about the heart of your application: the database. Deploying new code is one thing, but changing your database schema without causing a digital earthquake is a whole other level of tricky. That’s where database migration and versioning comes in. This practice treats your database schema changes just like application code: they are scripted, version-controlled, and applied systematically across all environments. No more manual ALTER TABLE commands whispered into a production terminal at midnight.

This process is absolutely vital for a platform like Zemith, which is constantly evolving. When we roll out a new project organization feature, the database needs a new schema to support it. By scripting these changes, we ensure that the update is applied identically in development, staging, and production, which is a cornerstone of reliable software deployment best practices. It prevents the dreaded "it works on my machine" scenario and protects against data loss or corruption during an update. Think of it as a repeatable, auditable recipe for evolving your data structure safely.

How to Get Started

- Treat Migrations as Code: Use a dedicated migration tool like Flyway, Liquibase, or the built-in systems in frameworks like Django and Ruby on Rails. Check your migration scripts into version control right alongside your application code.

- Write Reversible Migrations: Your best friend during a botched deployment is a backward migration. Always write a script to undo the change you’re making. This gives you a one-click rollback plan if things go sideways, saving you from a frantic data recovery mission.

- Decouple from Code Deployments: Ship backward-compatible schema changes before the application code that depends on them, and keep the new code path behind a feature flag. This lets you apply and verify the migration in a low-risk window without impacting users. Once it's confirmed, you can flip the flag to activate the new code.

- Design for Evolution: A well-structured database is easier to migrate. Building your schema on solid principles makes future changes less painful and risky. To brush up on this, you can learn more about our approach in this guide to database design principles.
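Tools like Flyway, Liquibase, and Django's migrations add locking, checksums, and richer history on top, but the core mechanism is small. This sketch uses Python's built-in `sqlite3` module with two made-up migrations for the project-organization example: each pairs an "up" step with the "down" step that reverses it, and a `schema_version` table records where the database stands. One caveat worth remembering: a down step that drops a table or column restores the schema, not the data that was in it.

```python
import sqlite3

# Illustrative migrations for a hypothetical project-organization feature.
# Each entry: (version, "up" SQL, "down" SQL that undoes it).
MIGRATIONS = [
    (1,
     "CREATE TABLE projects (id INTEGER PRIMARY KEY, name TEXT NOT NULL)",
     "DROP TABLE projects"),
    (2,
     "CREATE TABLE project_tags (project_id INTEGER REFERENCES projects(id), tag TEXT NOT NULL)",
     "DROP TABLE project_tags"),
]


def current_version(conn: sqlite3.Connection) -> int:
    """Highest applied migration, or 0 for a fresh database."""
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)")
    (version,) = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()
    return version or 0


def migrate(conn: sqlite3.Connection, target: int) -> int:
    """Move the schema up or down to `target` inside a single transaction.

    Expects a connection opened with isolation_level=None so this function
    issues BEGIN/COMMIT itself; SQLite can roll back DDL as well as data.
    """
    version = current_version(conn)
    conn.execute("BEGIN")
    try:
        for number, up, _down in MIGRATIONS:  # forward, oldest first
            if version < number <= target:
                conn.execute(up)
                conn.execute("INSERT INTO schema_version (version) VALUES (?)", (number,))
        for number, _up, down in reversed(MIGRATIONS):  # backward, newest first
            if target < number <= version:
                conn.execute(down)
                conn.execute("DELETE FROM schema_version WHERE version = ?", (number,))
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise
    return current_version(conn)


def tables(conn: sqlite3.Connection) -> set[str]:
    rows = conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
    return {name for (name,) in rows}


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:", isolation_level=None)
    print(migrate(conn, 2), sorted(tables(conn)))  # 2 ['project_tags', 'projects', 'schema_version']
    print(migrate(conn, 1), sorted(tables(conn)))  # 1 ['projects', 'schema_version']
```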

10. Graceful Degradation and Fallback Strategies

Even the best-laid deployment plans can hit a snag. A third-party API goes down, a microservice gets overloaded, or a cosmic ray flips a bit in your server rack (okay, maybe not that last one). A key software deployment best practice is to plan for these failures with graceful degradation. Instead of letting a single failed component bring your entire application crashing down, the system should intelligently offer a partial, yet still useful, experience.

This approach is vital for multi-faceted platforms like Zemith. If an external research API becomes unresponsive, our AI assistant shouldn't just throw an error. Instead, it can fall back to serving recent, cached results, ensuring users still get value while the issue is resolved. This resilience model, popularized by tech giants like Netflix and Amazon, turns a potential outage into a minor hiccup. By designing systems that fail gracefully, you build user trust and maintain core functionality even when things aren't perfect.

How to Get Started

- Implement Circuit Breakers: For any external service call, use a circuit breaker pattern. If an API starts failing repeatedly, the breaker "trips" and stops sending requests for a short period, allowing it to recover and preventing your app from getting stuck.

- Establish a Caching Layer: Maintain a cache of recent AI model outputs or frequently accessed data. For instance, Zemith's document assistant could cache summaries of recently analyzed files, providing an instant fallback if the live summarization model is under heavy load.

- Test Your Fallback Paths: Don't just assume your graceful degradation works. Regularly test these fallback scenarios as rigorously as you test your primary user flows. If you don't test it, it's already broken.

- Communicate Transparently: When a feature is in a degraded state, let the user know. A simple, non-intrusive banner like, "We're showing you recent results as live data is temporarily unavailable," manages expectations and prevents frustration.
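The circuit breaker and caching ideas combine naturally. Below is a minimal, single-threaded sketch, loosely in the spirit of libraries like Netflix's Hystrix but not based on their code: after a few consecutive failures the breaker opens and requests go straight to the cache, and once a cool-down has passed it lets a trial call through to see whether the service has recovered. The `research_api` function and the plain-dict cache are hypothetical placeholders. The returned `"cached"` or `"live"` tag is what you'd use to decide when to show the transparency banner mentioned above.

```python
import time
from typing import Any, Callable, Optional


class CircuitBreaker:
    """Minimal, single-threaded circuit breaker.

    Closed: calls go through. After `failure_threshold` consecutive failures it
    opens and rejects calls; once `reset_timeout` seconds pass it lets trial
    calls through (half-open). A success closes it; a failure re-opens it.
    """

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock  # injectable so tests can fake the passage of time
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow_request(self) -> bool:
        if self.opened_at is None:
            return True  # closed
        return self.clock() - self.opened_at >= self.reset_timeout  # half-open?

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()  # open (or re-open) and restart the cool-down


def call_with_fallback(breaker: CircuitBreaker, fetch: Callable[[str], Any],
                       cache: dict[str, Any], key: str) -> tuple[Any, str]:
    """Try the live service; if the breaker is open or the call fails, serve the cache.

    Returns (value, source) where source is "live" or "cached"; value is None
    if nothing has been cached for this key yet.
    """
    if breaker.allow_request():
        try:
            result = fetch(key)
        except Exception:
            breaker.record_failure()
        else:
            breaker.record_success()
            cache[key] = result  # keep the fallback copy fresh
            return result, "live"
    return cache.get(key), "cached"


if __name__ == "__main__":
    breaker = CircuitBreaker(failure_threshold=2, reset_timeout=30.0)
    cache = {"latest-papers": "results from the last successful call"}

    def research_api(key: str) -> str:
        raise TimeoutError("upstream unavailable")  # simulate an outage

    for attempt in range(3):
        print(attempt, call_with_fallback(breaker, research_api, cache, "latest-papers"))
```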

10-Point Comparison of Software Deployment Best Practices

| Practice | 🔄 Implementation complexity | ⚡ Resource & cost | 📊 Expected outcomes | 💡 Ideal use cases | ⭐ Key advantages |
|---|---|---|---|---|---|
| Automated Deployment Pipelines (CI/CD) | Medium–High 🔄: initial setup and test automation required | Moderate ⚡: CI runners, test infra, DevOps time | Faster, reliable deployments; fewer human errors 📊 | Frequent releases across multi-model services | Streamlined releases, audit trails, faster iteration ⭐ |
| Blue-Green Deployments | High 🔄: duplicate environment and sync complexity | High ⚡: doubled infra costs, DB sync overhead | Near-zero downtime; immediate rollback capability 📊 | Critical production updates needing zero downtime | Safe rollbacks and production-like validation ⭐ |
| Canary Deployments | Medium–High 🔄: traffic routing + monitoring complexity | Moderate ⚡: monitoring, release coordination tools | Gradual rollout with real-world validation and early issue detection 📊 | Testing AI model changes with a subset of users | Limits blast radius; collects live performance data ⭐ |
| Infrastructure as Code (IaC) | Medium 🔄: learning curve and version discipline | Low–Moderate ⚡: IaC tooling and repo management | Consistent, reproducible environments; faster provisioning 📊 | Multi-region infra and environment parity for AI services | Reproducibility, collaboration, disaster recovery ⭐ |
| Containerization and Orchestration | High 🔄: Kubernetes operational and networking complexity | Moderate–High ⚡: orchestration clusters, image registry | Scalable, consistent deployments; autoscaling and resilience 📊 | Multi-component AI services needing independent scaling | Portability, autoscaling, self-healing deployments ⭐ |
| Feature Flags & Progressive Rollouts | Low–Medium 🔄: simple toggles to governance needs | Low ⚡: feature management platform or library | Controlled feature exposure; safe experimentation and rollbacks 📊 | Gradual AI capability releases and A/B testing | Decouples release from deploy; quick rollback ⭐ |
| Monitoring, Logging & Observability | Medium–High 🔄: instrumentation, dashboards, alerting | Moderate–High ⚡: storage, agents, visualization tooling | Faster incident diagnosis, capacity planning, improved reliability 📊 | Tracking model latency/errors and multi-service traces | Actionable insights, reduced MTTR, informed SLOs ⭐ |
| Automated Testing, Coverage & Security Scanning | High 🔄: extensive test suites and security tooling maintenance | Moderate–High ⚡: compute for tests, scanners, tool licenses | Higher code quality, fewer regressions, earlier vulnerability detection 📊 | Validating AI outputs and preventing vulnerabilities pre-prod | Confidence in releases; catches regressions & security issues ⭐ |
| Database Migration & Versioning | Medium–High 🔄: careful migration, rollback and testing | Low–Moderate ⚡: migration tools, staging data environments | Safe schema evolution with traceable history; reduced data loss risk 📊 | Schema changes and data transformations across environments | Controlled migrations, rollback support, documented changes ⭐ |
| Graceful Degradation & Fallback Strategies | Medium 🔄: added fallback logic and extensive failure testing | Low–Moderate ⚡: caching, fallbacks, circuit breakers | Partial availability during failures; reduced cascading outages 📊 | Handling transient model outages or overloaded services | Improved resilience and user experience under failure ⭐ |

Ready to Deploy Like a Pro?

And there you have it, the modern playbook for shipping software without the late-night panic and stress sweats. We've navigated the landscape of software deployment best practices, from the foundational power of CI/CD pipelines to the surgical precision of canary deployments and the safety net of graceful degradation. It’s a lot to take in, but remember, this isn't an all-or-nothing exam. You don't have to implement everything overnight.

The journey from a "hope this works" deployment strategy to an "I know this works" mindset is incremental. It’s about building a culture of quality, safety, and velocity, one practice at a time. The real magic happens when these concepts start working together. Your automated tests in a CI pipeline give you the confidence to do a blue-green deployment. Your Infrastructure as Code scripts make spinning up that new environment a breeze. Your observability dashboards then tell you exactly how your canary release is performing in the wild.

From Theory to Reality: Your Next Steps

So, where do you start? Don't get overwhelmed trying to boil the ocean. Pick one area that feels like the biggest pain point for you or your team right now.

- Is every deployment a manual, error-prone marathon? Start by automating one small part of it. Set up a basic CI pipeline that runs your tests on every commit. That's a huge win.

- Worried about a new feature causing a widespread outage? Look into implementing feature flags. It’s a powerful way to de-risk your releases and gain fine-grained control over who sees what.

- Are your environments constantly out of sync? Dip your toes into Infrastructure as Code with a tool like Terraform or Pulumi. Start by codifying a single, simple piece of your infrastructure.

The core theme connecting all these practices is confidence. Confidence to ship faster, confidence to experiment, and confidence that when things inevitably go wrong, you have a plan to fix them quickly without impacting your users. Adopting these modern software deployment best practices isn't just about technical excellence; it's about building a more resilient business and a happier, less-stressed engineering team. You're not just deploying code; you're building a reliable, repeatable, and scalable engine for delivering value.

By focusing on automation, incremental rollouts, and robust monitoring, you transform deployments from a source of anxiety into a routine, predictable part of your development lifecycle. This frees up your team's mental energy to focus on what truly matters: innovating and building incredible products. And as your development and deployment workflows become more sophisticated, managing all the moving parts, from feature specs to research documents, becomes its own challenge. Streamlining this creative and organizational overhead is where the real velocity is unlocked.

Managing the creative and research phases that precede deployment can be just as complex as the deployment itself. If your team is struggling to keep documents, AI-powered workflows, and collaborative notes in one place, check out Zemith. It provides a unified workspace to streamline your entire innovation process, helping you organize the chaos so you can focus on building and deploying great software with confidence. Learn more and get started for free at Zemith.

Explore Zemith Features

Introducing Zemith

The best tools in one place, so you can quickly leverage the best tools for your needs.

All in One AI Platform

Go beyond AI Chat, with Search, Notes, Image Generation, and more.

Cost Savings

Access latest AI models and tools at a fraction of the cost.

Get Sh*t Done

Speed up your work with productivity, work and creative assistants.

Constant Updates

Receive constant updates with new features and improvements to enhance your experience.

Features

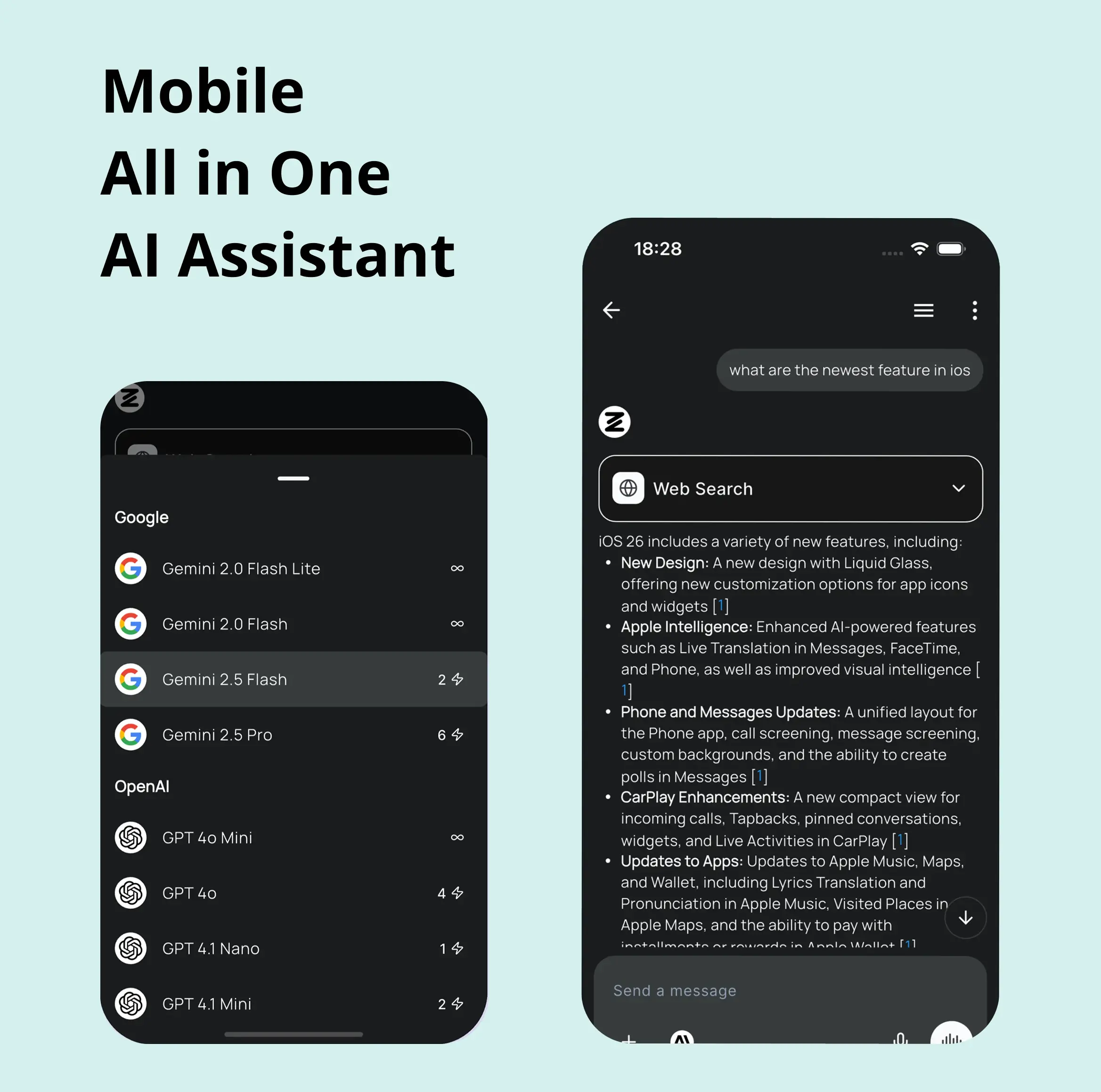

Selection of Leading AI Models

Access multiple advanced AI models in one place - featuring Gemini-2.5 Pro, Claude 4.5 Sonnet, GPT 5, and more to tackle any tasks

Speed run your documents

Upload documents to your Zemith library and transform them with AI-powered chat, podcast generation, summaries, and more

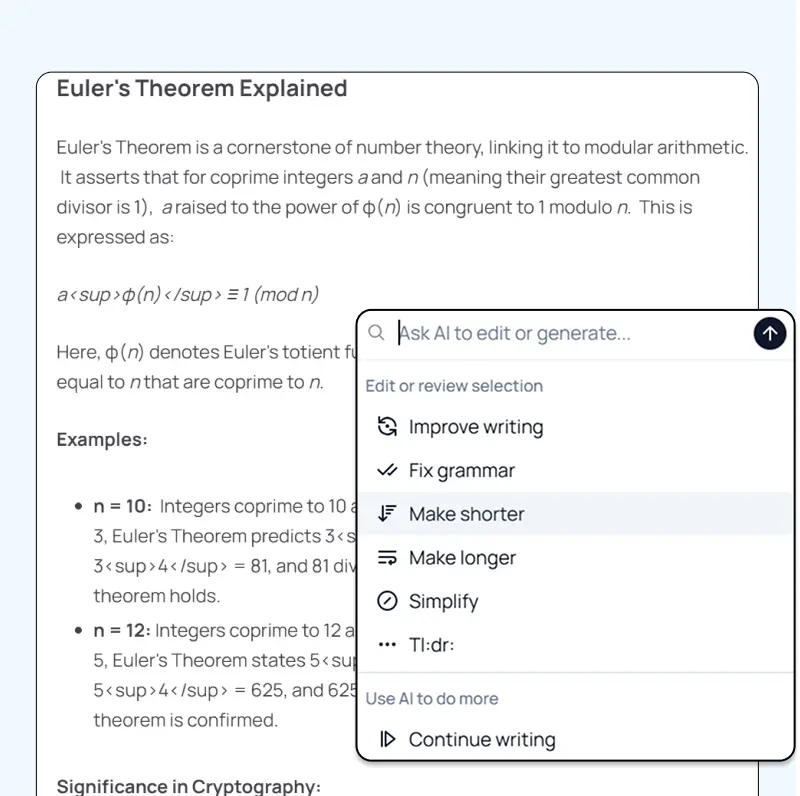

Transform Your Writing Process

Elevate your notes and documents with AI-powered assistance that helps you write faster, better, and with less effort

Unleash Your Visual Creativity

Transform ideas into stunning visuals with powerful AI image generation and editing tools that bring your creative vision to life

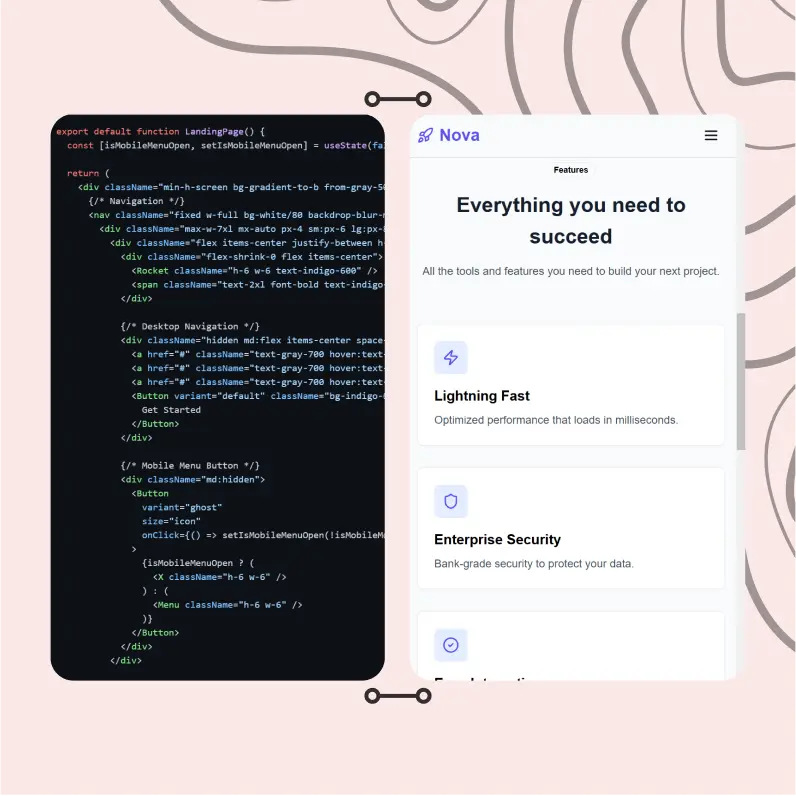

Accelerate Your Development Workflow

Boost productivity with an AI coding companion that helps you write, debug, and optimize code across multiple programming languages

Powerful Tools for Everyday Excellence

Streamline your workflow with our collection of specialized AI tools designed to solve common challenges and boost your productivity

Live Mode for Real Time Conversations

Speak naturally, share your screen and chat in realtime with AI

AI in your pocket

Experience the full power of Zemith AI platform wherever you go. Chat with AI, generate content, and boost your productivity from your mobile device.

Deeply Integrated with Top AI Models

Beyond basic AI chat - deeply integrated tools and productivity-focused OS for maximum efficiency

Straightforward, affordable pricing

Save hours of work and research

Affordable plan for power users

Plus

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

Professional

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Access to Video Generation

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Access to Video Generation

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools
