7 Best LLM Models of 2025: Your Ultimate Guide

Tired of juggling AI tools? We break down the 7 best LLM models of 2025, from OpenAI to Claude, with actionable insights to help you choose the right one.

It feels like a new, world-changing LLM drops every other Tuesday, right? One minute you're getting the hang of GPT-4, the next there's a new model from Google, Anthropic, or a startup you've never heard of that promises to write your emails, code your app, and maybe even walk your dog. (Okay, maybe not the dog walking... yet.) The AI explosion is exciting, but it's also… a lot. Keeping track of the best LLM models, their unique strengths, and their often-confusing pricing tiers can feel like a full-time job. You're trying to build something amazing, not spend your days deciphering API documentation.

That's where this guide comes in. We’re cutting through the noise to give you the real scoop on the top 7 players in the game: OpenAI, Anthropic, Google, AWS, Microsoft Azure, Hugging Face, and NVIDIA. For each one, we’ll break down what makes them tick, their ideal use cases, and exactly how to get started, complete with screenshots and direct links. To truly appreciate what these models can do, it helps to have a solid foundation in understanding Natural Language Processing (NLP), the core technology that powers them.

But here’s a thought: what if you didn't have to pick just one? What if you could access the best of all these models in a single, streamlined workspace without juggling a dozen subscriptions? That’s where platforms like Zemith are flipping the script, letting you leverage multiple AIs from one place. Let's dive in and find the right model (or models) to bring your masterpiece to life.

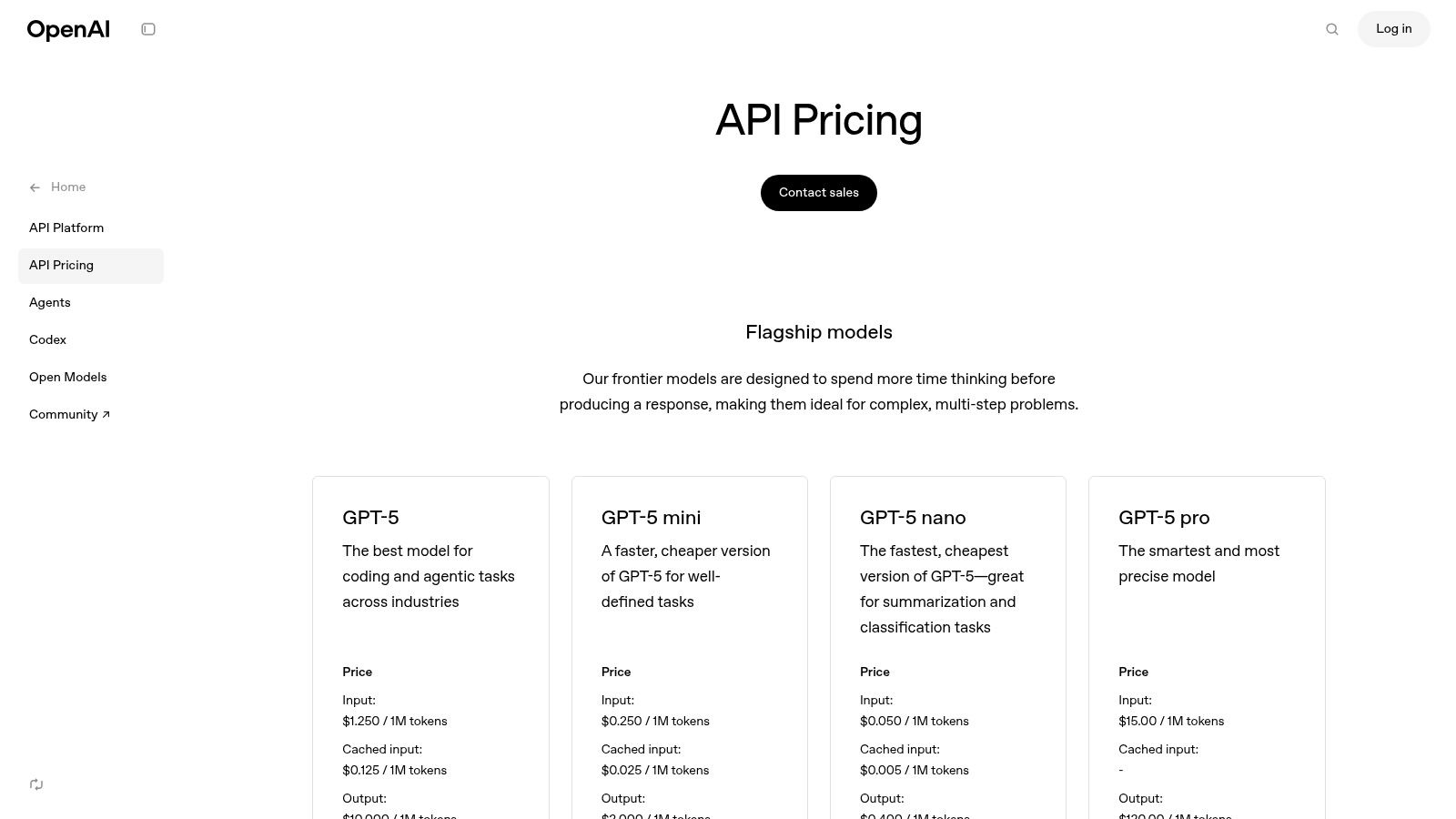

1. OpenAI (GPT Series): The One That Started It All

When you hear "AI," there's a good chance OpenAI is the first name that pops into your head. They basically kicked the door down and brought large language models into the mainstream. Their platform is the official home for the entire GPT family of models, making it the go-to starting point for countless developers, creators, and businesses looking for some of the best LLM models available today.

It's not just about the flagship models like GPT-4.1. OpenAI offers a whole buffet of options, from the o-series models built for step-by-step reasoning to Realtime models for low-latency speech and multimodal models that can see, hear, and speak. This variety makes their platform incredibly versatile, whether you're building a simple chatbot or a complex AI agent that can handle multiple tasks.

What Makes OpenAI Stand Out?

The real magic of the OpenAI platform is its mature and well-documented ecosystem. Getting started is a breeze thanks to their clean APIs, comprehensive documentation, and official SDKs for Python and Node.js. You can go from signing up to making your first API call in minutes, not hours.

For more advanced users, the platform offers powerful features like fine-tuning, which lets you train a model on your own data for specialized tasks. Plus, with emerging tools like AgentKit, they're paving the way for more sophisticated, autonomous AI agents. To get the most out of these powerful models, mastering how you ask questions is key; a deep dive into how prompt engineering can unlock an AI's full potential on Zemith.com can seriously level up your results.

Key Features & Pricing

- Wide Model Selection: Access to various tiers, including the flagship GPT-4.1, the reasoning-focused o-series, and Realtime models for low-latency speech.

- Multimodality: Work with models that can process and generate text, images, and audio.

- Developer-First Tools: Robust API, clear documentation, fine-tuning capabilities, and an intuitive usage dashboard.

- Transparent Pricing: The pay-as-you-go model is based on token usage, with different rates for each model. For example, `gpt-4.1` is more expensive than the faster, cheaper `gpt-o-mini`.
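To make token-based pricing concrete, here is a minimal sketch of a per-request cost estimate. The model labels and per-million-token rates are placeholders invented for illustration, not OpenAI's actual prices, so check the pricing page for current numbers before budgeting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rate:
    """Price in USD per one million tokens (placeholder values, not real prices)."""
    input_per_million: float
    output_per_million: float


# Hypothetical rates for illustration only; look up real numbers on the pricing page.
RATES = {
    "large-model": Rate(input_per_million=2.00, output_per_million=8.00),
    "mini-model": Rate(input_per_million=0.20, output_per_million=0.80),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request under pay-as-you-go pricing."""
    rate = RATES[model]
    return (
        input_tokens * rate.input_per_million
        + output_tokens * rate.output_per_million
    ) / 1_000_000


if __name__ == "__main__":
    for name in RATES:
        # 10,000 requests, each with 1,500 input tokens and 500 output tokens
        total = 10_000 * estimate_cost(name, 1_500, 500)
        print(f"{name}: ${total:,.2f}")
```

Input and output tokens are priced separately here because providers commonly bill them at different rates. At high volume, the gap between a large and a small model adds up quickly.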

Pros and Cons

| Pros | Cons |
|---|---|
| ✅ Best-in-class performance for a wide range of tasks | ❌ Costs can escalate quickly for high-volume applications |
| ✅ Mature, well-documented developer ecosystem and SDKs | ❌ Access to the newest models can be staggered or waitlisted |
| ✅ Straightforward API and easy onboarding for new users | ❌ Some advanced features, like Assistants, have separate costs per call |

Ultimately, OpenAI provides a powerful, reliable, and accessible foundation. It's an excellent choice if you're looking for top-tier general performance and a smooth development experience, making it a benchmark against which other LLM providers are often measured.

Website: https://openai.com/api/pricing/

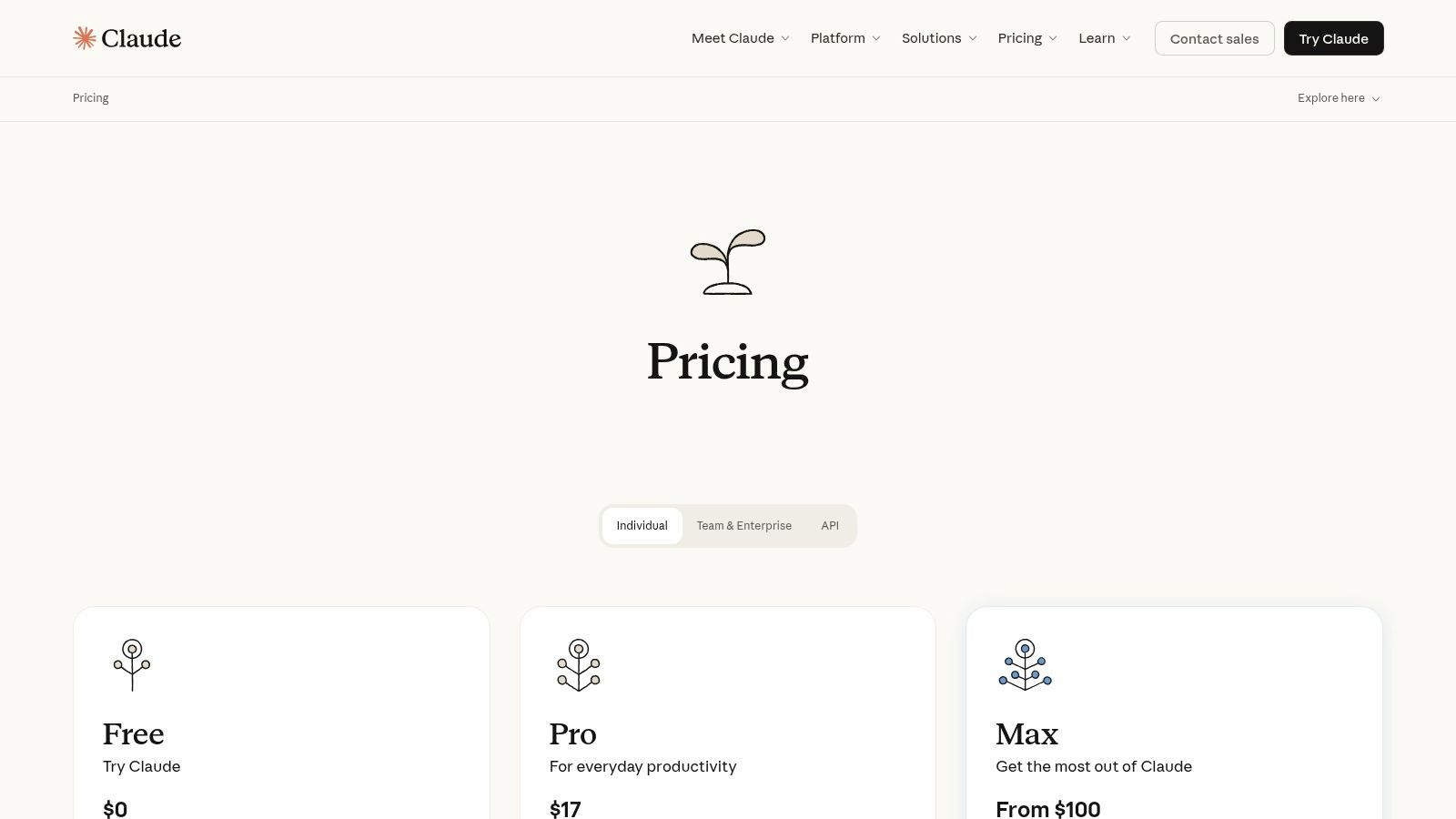

2. Anthropic (Claude): The Safety-Conscious Powerhouse

If OpenAI is the one that kicked the door down, Anthropic is the one that came in and made sure everything was thoughtfully organized and safety-checked. Born from a focus on AI safety, Anthropic's Claude family of models has rapidly become a top contender, particularly for tasks requiring nuanced understanding, reliability, and the ability to process enormous amounts of information.

The platform offers a tiered family of models: the super-smart Claude 3 Opus, the balanced and speedy Claude 3 Sonnet, and the lightning-fast Claude 3.5 Sonnet. This structure allows users to pick the perfect tool for the job, whether it's deep analysis or real-time customer support. Their reputation for producing some of the best LLM models for business analysis is built on a foundation of responsible AI development and impressive performance.

What Makes Anthropic Stand Out?

Anthropic's killer feature is its massive context window. With the ability to handle up to 200,000 tokens (that’s like a 500-page book), Claude is a standout choice for long-document analysis. You can drop in entire codebases, financial reports, or research papers and ask complex questions, a task that would choke models with smaller windows. This makes it an absolute beast for legal, financial, and academic use cases.
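Before dropping a huge file into a prompt, it helps to check roughly whether it will fit. The sketch below assumes about four characters per token, a common rule of thumb for English text rather than a real tokenizer. The function names and the space reserved for the reply are hypothetical choices for illustration.

```python
CONTEXT_WINDOW_TOKENS = 200_000  # the window size quoted above
CHARS_PER_TOKEN = 4  # rough rule of thumb for English text; an assumption, not a tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count from the character count (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_reply: int = 4_000) -> bool:
    """Check whether the text plus room for the model's answer fits in the window."""
    return estimate_tokens(text) + reserved_for_reply <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    report = "word " * 100_000  # stand-in for a long report (500,000 characters)
    print(estimate_tokens(report), fits_in_context(report))
```

For anything close to the limit, count tokens with the provider's own tooling instead, since real token counts vary with language and content.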

The platform is also incredibly developer-friendly, offering a clean API and a new "Batch API" that provides a significant 50% discount for asynchronous, high-volume workloads. This focus on both performance and cost-efficiency makes it a scalable choice. And because Claude is designed to be more "helpful and harmless," it often follows complex instructions with greater precision and is less prone to generating problematic outputs, which is a huge plus for enterprise applications.

Key Features & Pricing

- Massive Context Window: A 200K token context window allows for deep analysis of very large documents.

- Tiered Model Family: Choose between Opus (high-reasoning), Sonnet (balanced speed/performance), and the newer 3.5 Sonnet (fast and intelligent) to fit your needs.

- Cost-Saving APIs: A standard API with per-token pricing and a Batch API offering around a 50% discount for non-urgent tasks.

- Enterprise-Ready: Available on major cloud platforms like AWS Bedrock and Google Cloud Vertex AI for easy procurement and integration.

Pros and Cons

| Pros | Cons |
|---|---|
| ✅ Unmatched ability to analyze and reason over very long documents | ❌ Higher usage limits and advanced features are gated behind paid plans |
| ✅ Excellent at following complex, multi-step instructions | ❌ Pricing and model availability can vary on third-party cloud services |
| ✅ Strong focus on safety and reliability in its outputs | ❌ The ecosystem is newer and slightly less mature than OpenAI's |

In summary, Anthropic's Claude is the go-to choice when your primary need is processing vast amounts of text with a high degree of accuracy and safety. For anyone building applications that involve summarizing legal documents, analyzing financial reports, or reviewing code, Claude offers a powerful and reliable toolkit that’s hard to beat.

Website: https://claude.com/pricing

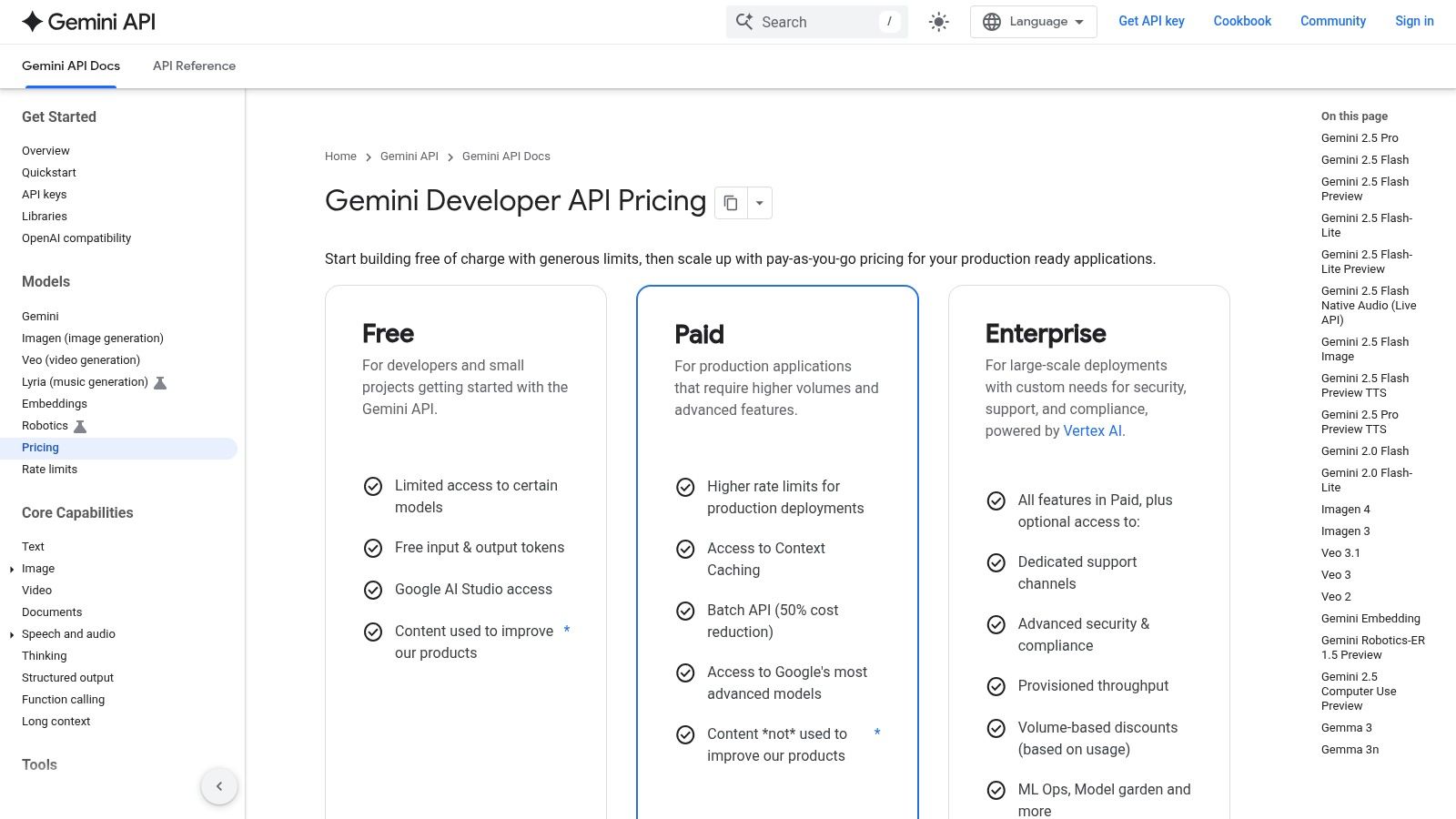

3. Google AI Studio (Gemini): The Multimodal Powerhouse

If OpenAI started the party, Google arrived with a massive speaker system and a laser light show. Google AI Studio is the developer-centric playground and API gateway for their powerful Gemini family of models. It's where you can tap into some of the most advanced multimodal and long-context capabilities on the market, positioning it as a serious contender for the title of best LLM models provider.

Google’s approach is all about integrating its massive data and search infrastructure directly into its AI. This means Gemini models don't just generate text; they can natively understand and process video, audio, and images while also "grounding" their responses with real-time information from Google Search. This makes them exceptionally powerful for tasks that require up-to-the-minute accuracy and complex, multi-format reasoning.

What Makes Google AI Studio Stand Out?

The standout feature is Gemini's jaw-dropping context window. Models like Gemini 2.5 boast a 1 million token context window, which is like giving the AI several novels to read and remember for a single conversation. This completely changes the game for summarizing massive documents, analyzing codebases, or processing lengthy videos without losing track of details.

The platform is also incredibly approachable. The Google AI Studio web console allows for rapid prototyping and prompt tuning without writing a single line of code. When you’re ready to build, the API is straightforward, and the free tier is generous enough to let you experiment and build a proof-of-concept without pulling out your credit card. This blend of cutting-edge tech and accessibility makes it a fantastic choice for developers looking to push the boundaries of what's possible with AI.

Key Features & Pricing

- Massive Context Window: Process and reason over up to 1 million tokens (and sometimes more in private preview) with models like Gemini 2.5.

- Native Multimodality: Handle text, images, audio, and video inputs seamlessly within the same model.

- Grounding with Google Search: Enhance model accuracy by connecting responses to live search results for fact-checking and up-to-date information.

- Generous Free Tier: A substantial free quota of requests per minute makes it easy to get started and experiment.

- Cost-Saving Features: Context caching allows you to reuse prompts and reduce token costs for repeated queries.

Pros and Cons

| Pros | Cons |
|---|---|
| ✅ Industry-leading context window for long-form analysis | ❌ Some advanced features like grounding have separate pricing |
| ✅ Excellent price-to-performance ratio for multimodal tasks | ❌ Production deployments can feel tied to the Google Cloud ecosystem |
| ✅ Generous free tier is perfect for prototyping and learning | ❌ The model lineup can feel less straightforward than competitors |

In the end, Google AI Studio is the place to be if your project involves massive amounts of data or requires a deep understanding of multiple media formats. For those building the next wave of natural language processing applications detailed on Zemith.com, Gemini offers a toolkit that can handle almost any data you throw at it.

Website: https://ai.google.dev/pricing

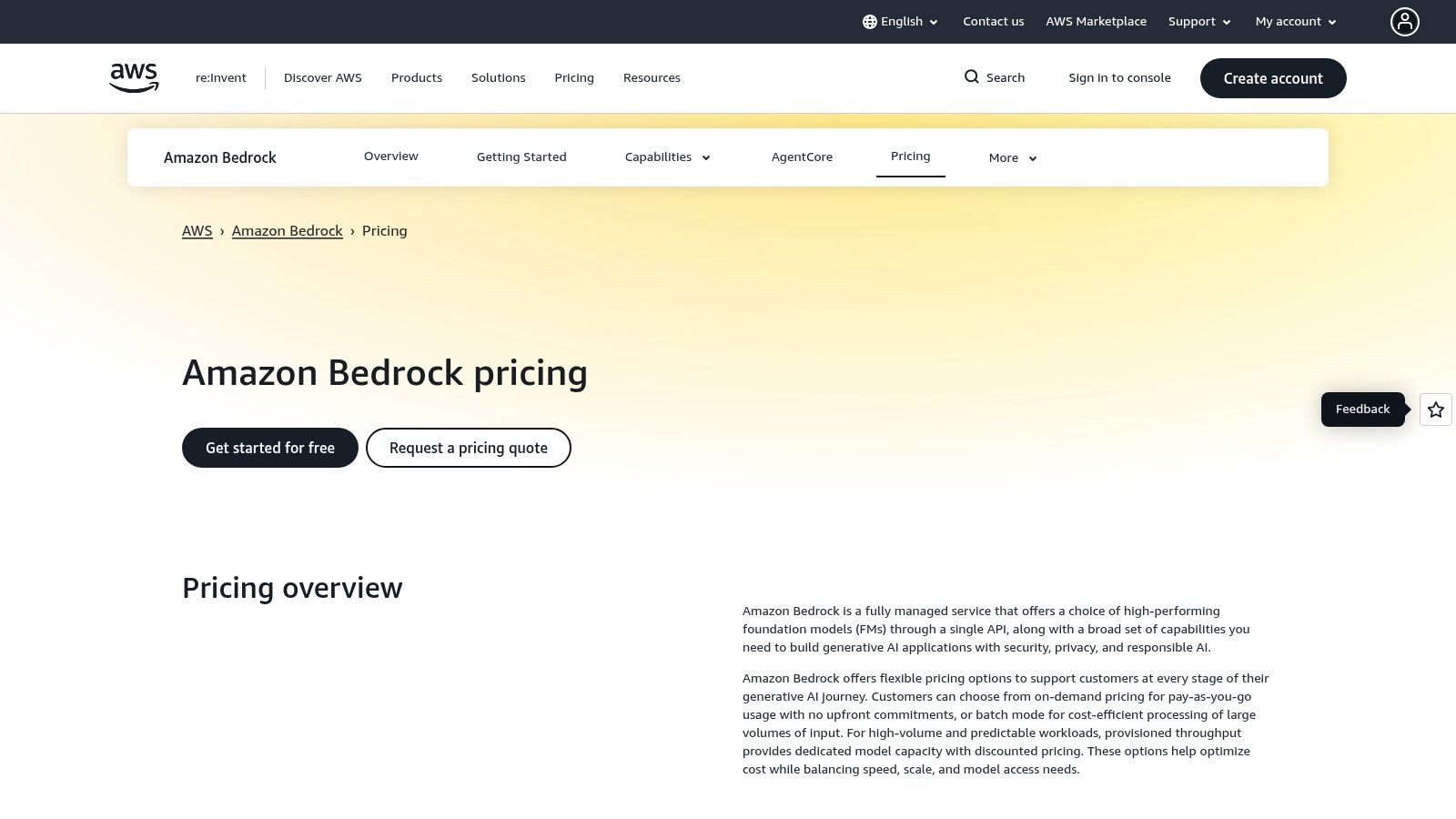

4. AWS Amazon Bedrock: The Enterprise AI Supermarket

If your company already lives and breathes in the AWS ecosystem, Amazon Bedrock is less of a new tool and more of a natural extension of your existing cloud setup. It’s not a single model provider but a managed service that acts like a supermarket for some of the best LLM models from various leading AI labs. You get access to models from Anthropic (Claude), Meta (Llama), Mistral, and Amazon’s own Titan family, all under one roof.

This approach is perfect for enterprises that need to experiment with different models without juggling multiple APIs, contracts, and billing cycles. Bedrock wraps everything in the familiar AWS security and governance blanket, making it a much easier sell to your IT and security teams. You can switch from Claude 3 Sonnet to Llama 3 with a simple API parameter change, making A/B testing a breeze.
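As a rough illustration of that parameter swap, the sketch below builds the same request for two models and changes only the model ID. It assumes the Bedrock Runtime Converse API via `boto3`. The helper name `build_converse_request` is hypothetical, and the two model IDs are examples that should be confirmed in the Bedrock console for your region.

```python
def build_converse_request(model_id: str, prompt: str, max_tokens: int = 512) -> dict:
    """Build keyword arguments for the Bedrock Runtime Converse API."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens},
    }


# Example model IDs (assumptions); check the Bedrock console for what your account can use.
CLAUDE = "anthropic.claude-3-sonnet-20240229-v1:0"
LLAMA = "meta.llama3-8b-instruct-v1:0"

if __name__ == "__main__":
    prompt = "Summarize the benefits of a unified model API in one sentence."
    for model_id in (CLAUDE, LLAMA):
        request = build_converse_request(model_id, prompt)
        print(request["modelId"])
        # With AWS credentials and model access configured, the call would look like:
        #   import boto3
        #   client = boto3.client("bedrock-runtime")
        #   response = client.converse(**request)
        #   print(response["output"]["message"]["content"][0]["text"])
```

Everything except `modelId` stays identical between the two requests, which is what makes side-by-side testing cheap to set up.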

What Makes AWS Bedrock Stand Out?

The killer feature of Bedrock is its deep integration with the AWS ecosystem. Imagine plugging a powerful LLM directly into your S3 buckets, Lambda functions, and VPCs without any complex networking hoops. This makes it incredibly powerful for building scalable, secure, and private AI applications that leverage your existing cloud infrastructure.

Beyond just offering models, Bedrock provides tools to build on top of them. You can fine-tune models on your proprietary data, create Retrieval-Augmented Generation (RAG) pipelines with Knowledge Bases, and build agents that can execute tasks across your company's systems. This unified platform approach is a huge advantage for companies looking to go beyond simple text generation and implement robust AI solutions. To see how this fits into a bigger picture, exploring the top AI workflow automation tools on Zemith.com can reveal how Bedrock powers complex, automated processes.

Key Features & Pricing

- Multi-Provider Model Access: A diverse catalog featuring models from Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon.

- Unified API: A single API to access and experiment with models from different providers, simplifying development and testing.

- Enterprise-Grade Security: Integrates with AWS IAM for access control, VPC for private networking, and KMS for data encryption.

- Flexible Pricing: Offers on-demand (pay-as-you-go), provisioned throughput (for guaranteed performance at a fixed cost), and batch inference modes.

Pros and Cons

| Pros | Cons |
|---|---|
| ✅ Centralized billing and governance within the AWS ecosystem | ❌ Pricing can be complex, with different rates for each model and mode |
| ✅ Easily A/B test and swap models from various top providers | ❌ Best pricing often requires a commitment via provisioned throughput |
| ✅ Strong security, privacy, and compliance controls | ❌ You might not get access to a provider's newest model immediately |

Ultimately, Amazon Bedrock is the pragmatic choice for businesses already invested in AWS. It trades the absolute cutting-edge, day-one access of a direct provider for enterprise-grade stability, security, and the convenience of a one-stop shop for powerful AI models.

Website: https://aws.amazon.com/bedrock/pricing/

5. Microsoft Azure AI Foundry (Model Catalog)

For organizations already deep in the Microsoft ecosystem, Azure AI Foundry is less a choice and more a natural extension. It’s a unified hub that brings a massive catalog of models from providers like OpenAI, xAI (Grok), Meta, Mistral, and many others directly into the Azure environment you already know and trust. This isn't just about offering variety; it's about providing a clear, secure, and governed path from a cool AI prototype to a fully deployed, production-ready application.

Azure AI Foundry essentially acts as a superstore for some of the best LLM models on the market, but with Microsoft's enterprise-grade wrapper. You can experiment with Llama 3 for creative text generation, then switch to Grok for a more conversational, real-time feel, all through a consistent set of tools and APIs. This "Models-as-a-Service" approach simplifies procurement, billing, and security, making it a powerhouse for businesses that need to scale.

What Makes Azure AI Foundry Stand Out?

The killer feature here is seamless integration with the broader Azure stack. Your AI model is no longer an isolated project; it's a first-class citizen within your existing cloud infrastructure. This means you can easily connect it to Azure Functions, Logic Apps, and other services while benefiting from enterprise must-haves like private networking, role-based access control (RBAC), and Microsoft’s service-level agreements (SLAs).

This integration streamlines complex workflows, such as feeding documents from a blob storage into a powerful model for analysis. For businesses looking to automate paperwork, this setup can be a game-changer, and the guide to how intelligent document processing software works on Zemith.com covers that pipeline in more detail. The ability to manage everything under a single Azure subscription simplifies billing and lets you leverage existing procurement agreements, a huge plus for corporate finance teams.

Key Features & Pricing

- Massive Model Catalog: Access to thousands of models, including those from OpenAI, Meta, Mistral, and more, all under one roof.

- Enterprise Governance: Built-in security with private networking, RBAC, and Microsoft SLAs for production environments.

- Unified Deployment: Choose between serverless endpoints for pay-as-you-go flexibility or provisioned throughput for predictable performance at scale.

- Integrated Billing: Consolidates all model usage costs into your existing Azure subscription and billing cycle.

Pros and Cons

| Pros | Cons |
|---|---|
| ✅ Top-tier enterprise security, governance, and private networking | ❌ Pricing is fragmented and can be complex, varying by model provider |
| ✅ Seamless integration with the entire Microsoft Azure ecosystem | ❌ Some models or features may have regional availability restrictions |
| ✅ Simplifies procurement and billing for existing Azure customers | ❌ The sheer number of options can feel overwhelming for newcomers |

Ultimately, Azure AI Foundry is the go-to choice for businesses committed to the Microsoft cloud. It trades some of the simplicity of a standalone provider for unparalleled enterprise control, security, and a clear, integrated path to production.

Website: https://azure.microsoft.com/en-us/products/ai-foundry/

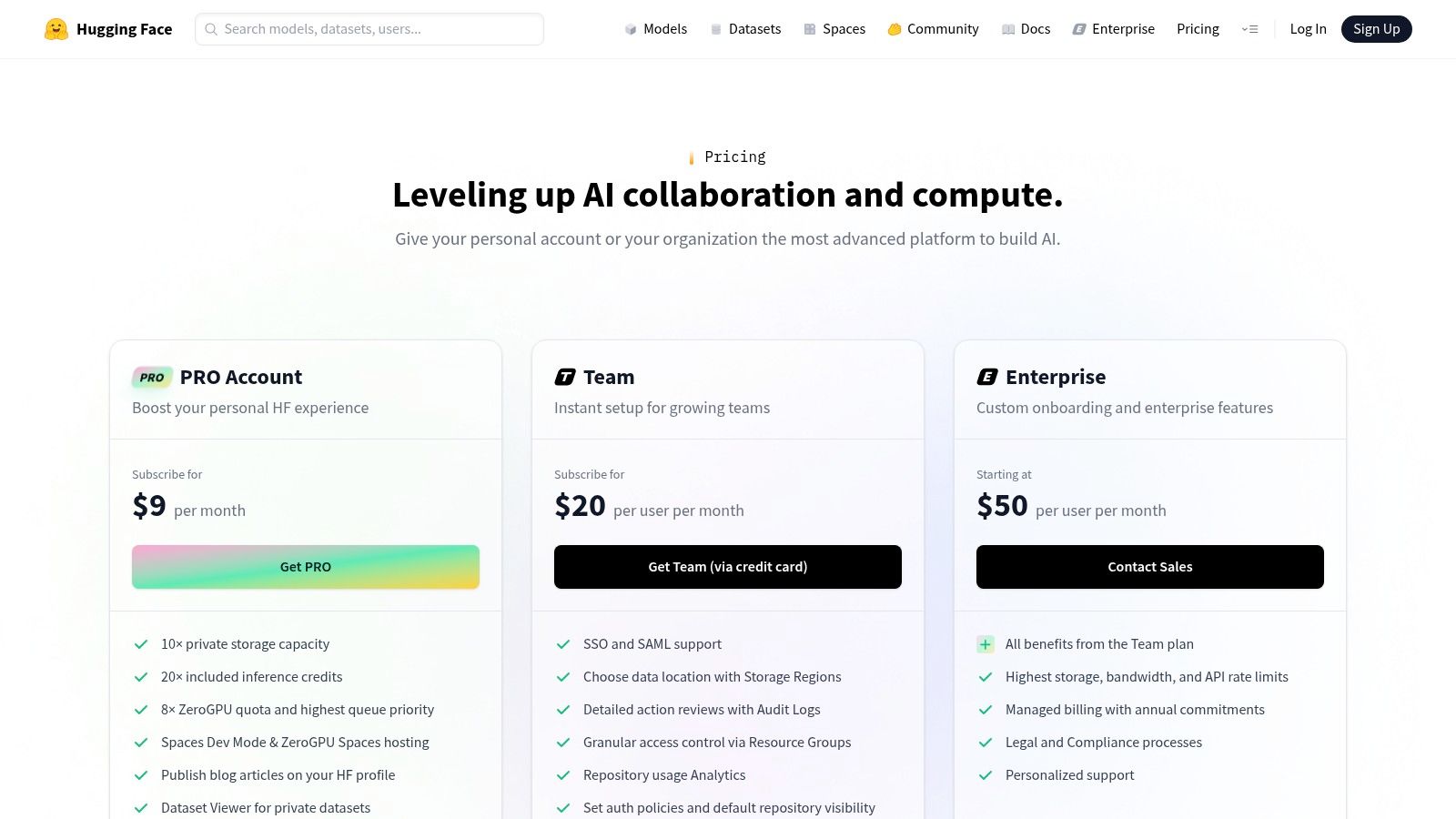

6. Hugging Face: The Open-Source LLM Community Hub

If proprietary models are the polished, all-inclusive resorts of the AI world, Hugging Face is the bustling, vibrant open-air market. It's not just a platform; it's the de facto home for the open-source AI community. This is where you'll find a staggering collection of models like Llama, Mistral, Gemma, and Qwen, all contributed, evaluated, and improved upon by a global network of developers.

Hugging Face has become the definitive playground for anyone looking to experiment with a wide array of the best open source LLM models without being tied to a single corporate ecosystem. It’s where you go to browse, download, and test thousands of models, check out community-driven performance leaderboards, and even deploy them for your own applications with just a few clicks. It’s less of a single product and more of a comprehensive toolkit for all things open-source AI.

What Makes Hugging Face Stand Out?

The core strength of Hugging Face is its community-centric approach. The platform provides all the infrastructure needed to discover, evaluate, and deploy models. You can easily see how different models stack up on various benchmarks, read discussions from other users, and access everything from the model weights to the training artifacts. This transparency is a massive departure from the closed-off nature of proprietary providers.

For developers ready to move beyond experimentation, Hugging Face offers powerful tools like Inference Endpoints and Spaces. Inference Endpoints let you deploy a model on dedicated, auto-scaling infrastructure with transparent, hourly pricing for CPUs and GPUs. Spaces allows you to quickly build and share interactive demos of your models. This combination makes it one of the most powerful and flexible AI tools for developers looking to leverage the open-source movement.

Key Features & Pricing

- Massive Model Repository: An unparalleled library of open-source models with community leaderboards and detailed model cards.

- Managed Inference Endpoints: Easily deploy models for production use cases with dedicated, auto-scaling hardware.

- Spaces & Demos: A simple way to build and share interactive applications and demos powered by your chosen models.

- Transparent Infrastructure Pricing: You pay for the underlying hardware (CPU/GPU) on an hourly basis, giving you direct control over cost and performance.
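Hourly pricing means your bill depends on uptime rather than tokens. Here is a minimal sketch of that arithmetic. The hourly rate is a made-up placeholder and the function name is hypothetical, so plug in the real rate for the instance type you choose.

```python
def monthly_endpoint_cost(
    hourly_rate_usd: float,
    replicas: int = 1,
    hours_per_day: float = 24.0,
    days: int = 30,
) -> float:
    """Estimate the monthly cost of a dedicated endpoint billed per hour per replica."""
    return hourly_rate_usd * replicas * hours_per_day * days


if __name__ == "__main__":
    rate = 0.50  # placeholder USD/hour, not a real Hugging Face price
    always_on = monthly_endpoint_cost(rate, replicas=2)
    office_hours = monthly_endpoint_cost(rate, replicas=2, hours_per_day=8)
    print(f"always on: ${always_on:.2f}, office hours only: ${office_hours:.2f}")
```

An endpoint that idles costs the same as a busy one, so scaling down during quiet hours is usually the biggest lever on the bill.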

Pros and Cons

| Pros | Cons |
|---|---|
| ✅ Unmatched access to a vast and diverse range of open-source LLMs | ❌ You are responsible for managing cost-performance hardware trade-offs |
| ✅ Strong community, transparent benchmarks, and robust ecosystem | ❌ Support and compliance depend on the specific model's license and maintainer |
| ✅ Clear, infrastructure-based pricing for deployments | ❌ Can have a steeper learning curve than a simple, proprietary API |

In short, Hugging Face is the essential destination for anyone serious about working with open-source AI. It provides the freedom, flexibility, and community support needed to explore the cutting edge of language models and turn them into real-world applications.

Website: https://huggingface.co/pricing

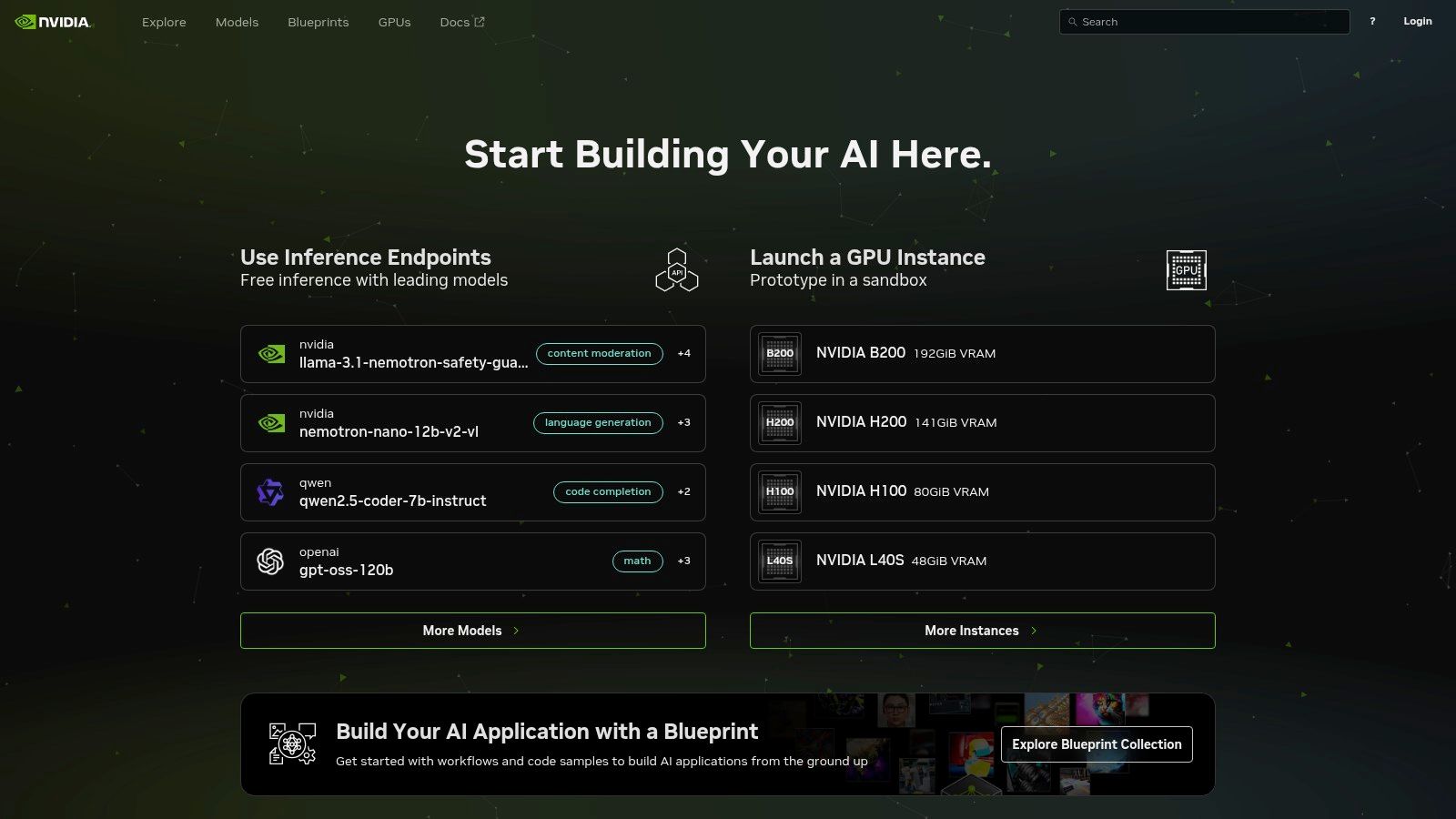

7. NVIDIA API Catalog (NIM microservices): The 'Run Anywhere' Powerhouse

If you think NVIDIA is just about the graphics cards that power your gaming rig, think again. They're a titan in the AI hardware space, and their API Catalog is their way of bringing optimized, production-ready large language models directly to developers. This isn't just another API endpoint; it's a collection of powerful, containerized inference microservices (NIMs) that you can run literally anywhere you have NVIDIA GPUs, from the cloud to your own data center.

The platform offers a curated selection of some of the best LLM models from across the ecosystem, including popular open-source choices like Llama 3, Mixtral, and Gemma 2. Each model is pre-packaged into a NIM, which is essentially a plug-and-play container that's been hyper-optimized for NVIDIA hardware. This approach gives businesses ultimate control over their data and infrastructure while still benefiting from top-tier performance.

What Makes the NVIDIA API Catalog Stand Out?

The core differentiator here is portability and performance. Instead of being locked into a single cloud provider's API, NVIDIA lets you take the models with you. You can start by prototyping on their hosted endpoints (powered by DGX Cloud) for free as an NVIDIA Developer member, and when you're ready for production, you can download the exact same container and deploy it on-premise or in your private cloud. It’s the ultimate "try before you buy" for serious enterprise AI.

This model provides unparalleled flexibility for companies with strict data privacy, security, or latency requirements. The NIMs come with a standard, OpenAI-compatible API, making it surprisingly simple to switch your existing applications over. You get the performance benefits of running on specialized hardware without having to completely re-architect your software. This focus on deployment flexibility is what sets NVIDIA apart from a purely API-based provider.
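Because the API is OpenAI-compatible, switching can come down to changing a base URL. The sketch below builds (but does not send) a chat completions request using only the standard library. The local NIM address, the model name, and the helper name are assumptions for illustration, so check your deployment's documentation for the real values.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, api_key: str, model: str, prompt: str
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = json.dumps(
        {
            "model": model,
            "messages": [{"role": "user", "content": prompt}],
        }
    ).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


OPENAI_BASE = "https://api.openai.com/v1"
SELF_HOSTED_NIM_BASE = "http://localhost:8000/v1"  # hypothetical local NIM container

if __name__ == "__main__":
    # Only the base URL and model name change between providers.
    req = build_chat_request(
        SELF_HOSTED_NIM_BASE, "placeholder-key", "meta/llama3-8b-instruct", "Hello"
    )
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req), then parse the JSON response.
```

The request body and headers are identical for both targets, which is the practical meaning of "compatible" here.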

Key Features & Access

- Portable NIMs: Containerized microservices let you deploy top models like Llama 3 and Mixtral consistently across any NVIDIA-powered infrastructure.

- Optimized Performance: Models are packaged and tuned for inference to deliver low latency and high throughput on NVIDIA GPUs.

- Hosted Endpoints: Free trial access on NVIDIA's DGX Cloud for developers to quickly test and prototype without setting up any hardware.

- Enterprise-Ready: A clear path from free development to a production-grade, self-hosted deployment with an NVIDIA AI Enterprise license for support.

Pros and Cons

| Pros | Cons |
|---|---|
| ✅ Superior performance and low latency on NVIDIA GPUs | ❌ Production self-hosting typically requires an NVIDIA AI Enterprise license |
| ✅ Unmatched portability across cloud and on-premise data centers | ❌ Hosted trial endpoints have rate limits and are not for production use |
| ✅ Free prototyping for NVIDIA Developer Program members | ❌ Can have a higher total cost of ownership (hardware + license) |

Ultimately, the NVIDIA API Catalog is for teams that need control, performance, and a clear path to self-hosting. If your application demands minimal latency and you operate within the NVIDIA ecosystem, this platform provides an incredibly powerful and flexible way to deploy the best LLM models on your own terms.

Website: https://build.nvidia.com/

Top 7 LLM Platforms Comparison

| Platform | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes ⭐ | Ideal Use Cases 📊 | Key Advantages 💡 |
|---|---|---|---|---|---|
| OpenAI | Low–Medium: well-documented APIs/SDKs; straightforward onboarding | Moderate: cloud APIs; costs scale with throughput and realtime features | High ⭐⭐⭐: reliable general performance, multimodal and reasoning models | Production apps, agents, multimodal features, rapid developer onboarding | Best-in-class models, fine-tuning, mature developer ecosystem |
| Anthropic (Claude) | Low–Medium: standard API surface; tiered plans for higher limits | Moderate: token pricing; long-context usage increases cost | High ⭐⭐⭐: excellent instruction-following and safer defaults | Safety-sensitive assistants, very long-context tasks, enterprise usage | Safer behavior by default, very large context windows, simple per-token pricing |
| Google AI Studio (Gemini) | Medium: web console and APIs; pairs best with Google Cloud for production | Moderate–High: free tiers for experiment; grounding/Live APIs add costs | High ⭐⭐⭐: strong multimodal and search-grounded capabilities, huge context | Hybrid reasoning, multimodal apps, search‑grounded assistants, prototyping | Very large context (up to ~1M tokens), grounding with Google Search, generous free tier |
| AWS Amazon Bedrock | Medium–High: integrates with AWS security/compliance; unified multi-model API | High: AWS infra, model-dependent pricing, provisioned throughput options | High ⭐⭐⭐: enterprise-ready governance and multi-vendor flexibility | Enterprises on AWS needing centralized billing, A/B testing, compliance | Centralized billing/IAM/VPC controls, broad multi-model catalog |
| Microsoft Azure AI Foundry | Medium–High: Azure-native deployment, serverless endpoints and RBAC setup | High: Azure subscription, region/model-specific availability, provisioned options | High ⭐⭐⭐: enterprise-grade security and production deployment paths | Organizations standardized on Microsoft stack, regulated environments | Enterprise security, private networking, Microsoft SLAs and integration |
| Hugging Face | Low–Medium: easy to explore and deploy; selecting infra for production required | Variable: choose CPU/GPU/TPU instances; transparent infra pricing | Variable ⭐⭐: depends on model choice and deployment configuration | Rapid prototyping, open-source model experimentation, community benchmarks | Massive model hub, community evals, Spaces for demos, transparent infra costs |
| NVIDIA API Catalog (NIMs) | Medium–High: containerized "run anywhere" NIMs; orchestration needed for scale | High: optimized for NVIDIA GPUs; may require AI Enterprise license for prod | High ⭐⭐⭐: optimized for low latency and throughput on NVIDIA hardware | High-performance inference, on‑prem/cloud GPU deployments, portable workloads | NVIDIA-optimized performance, portable containers, enterprise support paths |

Stop Juggling, Start Creating: Unify Your AI Workflow

Whew. We've just navigated a whirlwind tour of the AI landscape, from OpenAI's powerhouse models and Anthropic's constitutional approach to the massive model catalogs from Google, AWS, Microsoft, Hugging Face, and NVIDIA. It's clear that the debate over the single "best LLM model" is kind of a trick question.

The real answer is, "It depends." The best model for drafting a Python script isn't necessarily the best for summarizing a 200-page legal discovery document or brainstorming a viral marketing campaign. Each model we've explored has its own unique genius, its own specific flavor of artificial intelligence.

The Real Challenge: From Model Selection to Workflow Sanity

Choosing the right tool for the job is one thing. But what happens when your project requires the strengths of multiple models? You find yourself trapped in a productivity nightmare I like to call "AI Tab Hell."

You're paying for multiple subscriptions, juggling a half-dozen API keys, and wearing out your CTRL+C and CTRL+V keys pasting prompts and responses between browser tabs. This constant context-switching kills your creative flow and turns a powerful AI workflow into a clunky, disjointed mess. It’s like trying to cook a gourmet meal using a different kitchen for each ingredient.

The Big Takeaway: The ultimate bottleneck isn’t the power of the models themselves, but the fragmented way we're forced to access them. True productivity comes from integration, not just selection. This is precisely the problem platforms like Zemith are built to solve.

Your Action Plan: How to Choose and Implement Your AI Stack

So, what are your next steps? How do you move from analysis paralysis to actually building something amazing with these tools?

- Define Your Core Task: Before anything else, get brutally specific about your primary goal. Is it code generation? Long-form content creation? Data analysis? This single decision will narrow your choices from seven to maybe two or three top contenders.

- Run a Small-Scale "Bake-Off": Don't commit to a single model based on benchmarks alone. Take a real-world task you do every day and run it through your top two choices. Give them the exact same prompt and see which one delivers a result that's closer to your desired output. You'll learn more in 15 minutes of hands-on testing than in hours of reading reviews.

- Consider the Ecosystem (Or Don't!): Remember that these models don't exist in a vacuum. If your team is already heavily invested in AWS, Amazon Bedrock offers a seamless integration path. Or, you can bypass this lock-in entirely. Instead of tying yourself to one ecosystem, consider a unified platform like Zemith, which lets you cherry-pick the best models from every provider without getting bogged down in platform-specific tools. Understanding the best LLM models is a great start, but to truly leverage AI, it's also helpful to explore other essential AI tools that can complement your workflow for everything from video creation to project management.
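The bake-off step in the plan above can be sketched as a tiny harness that sends one prompt to several models and records what comes back. The two model functions here are stand-ins that just transform the prompt. In practice you would replace each with a real call to the provider you are testing.

```python
import time
from typing import Callable, Dict


def bake_off(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, dict]:
    """Send the same prompt to every model and record the output and latency."""
    results = {}
    for name, call_model in models.items():
        start = time.perf_counter()
        output = call_model(prompt)
        results[name] = {
            "output": output,
            "seconds": time.perf_counter() - start,
        }
    return results


# Stand-in functions; replace each with a real API call to the provider under test.
def fake_model_a(prompt: str) -> str:
    return f"[model A] {prompt.upper()}"


def fake_model_b(prompt: str) -> str:
    return f"[model B] {prompt[::-1]}"


if __name__ == "__main__":
    results = bake_off(
        "Draft a two-line product update.",
        {"model-a": fake_model_a, "model-b": fake_model_b},
    )
    for name, result in results.items():
        print(f"{name} ({result['seconds']:.4f}s): {result['output']}")
```

Keeping the prompt identical across models is the whole point. Judge the outputs yourself against the result you actually wanted, not just the latency.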

The Future is Unified, Not Scattered

The era of picking one "winner" is over. The future of productive AI work lies in using the right model for the right micro-task, all within a single, unified environment. Imagine using Claude's massive context window to feed a document, having a GPT model write code based on that context, and then using a Gemini model to fact-check the output against the latest web results, all without ever leaving your project dashboard.

This isn't a far-off dream; it's the new standard for efficient, creative, and technical work. It’s time to stop juggling and start creating.

Ready to ditch the tab-chaos and consolidate your AI workflow into one powerful app? Zemith brings the best LLM models, document analysis, coding assistants, and more into a single, cohesive workspace. Stop paying for five different tools and start creating in a true SuperAI App by visiting Zemith today.

Explore Zemith Features

Introducing Zemith

The best tools in one place, so you can quickly leverage the best tools for your needs.

All in One AI Platform

Go beyond AI Chat, with Search, Notes, Image Generation, and more.

Cost Savings

Access latest AI models and tools at a fraction of the cost.

Get Sh*t Done

Speed up your work with productivity, work and creative assistants.

Constant Updates

Receive constant updates with new features and improvements to enhance your experience.

Features

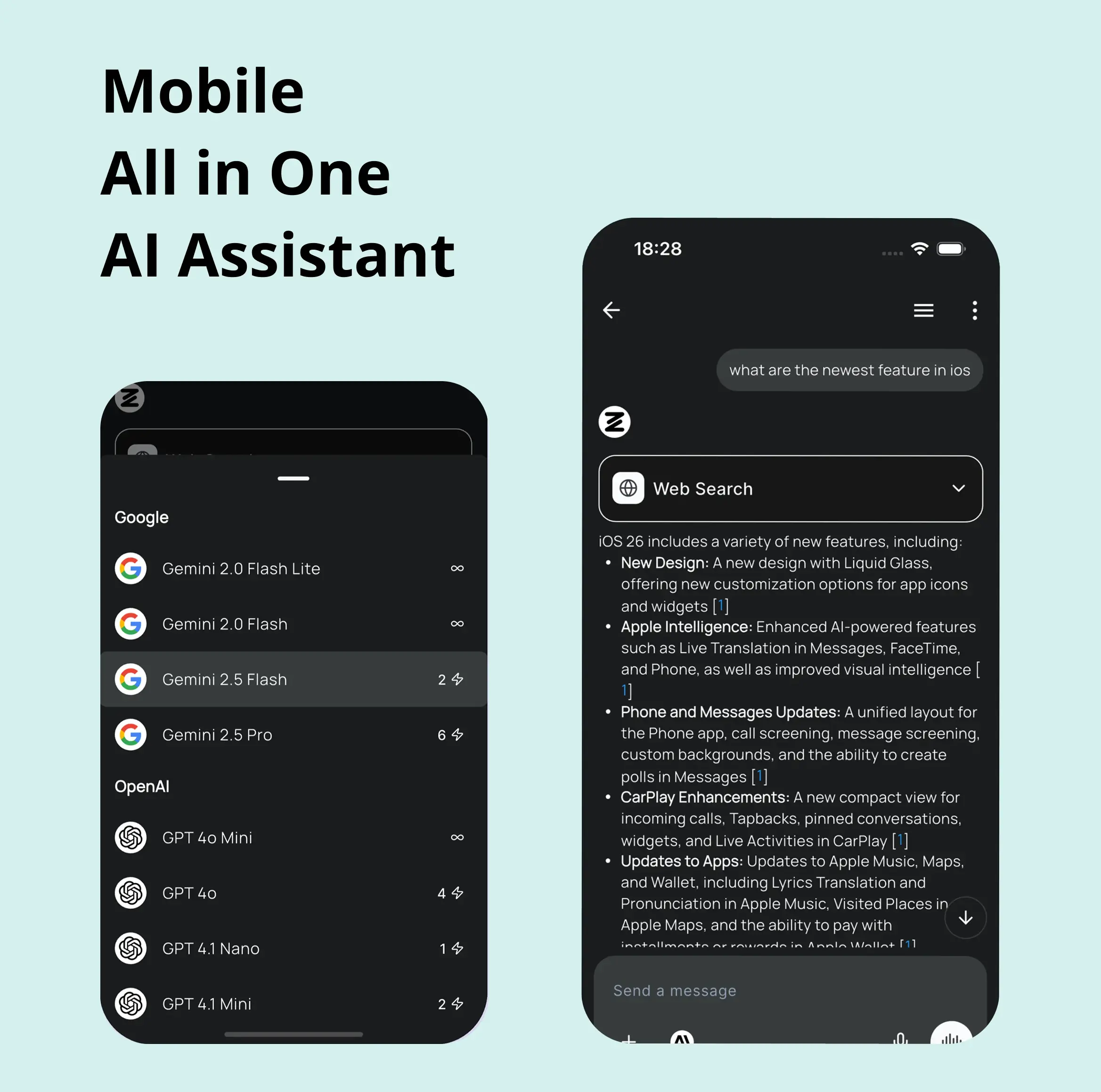

Selection of Leading AI Models

Access multiple advanced AI models in one place - featuring Gemini-2.5 Pro, Claude 4.5 Sonnet, GPT 5, and more to tackle any tasks

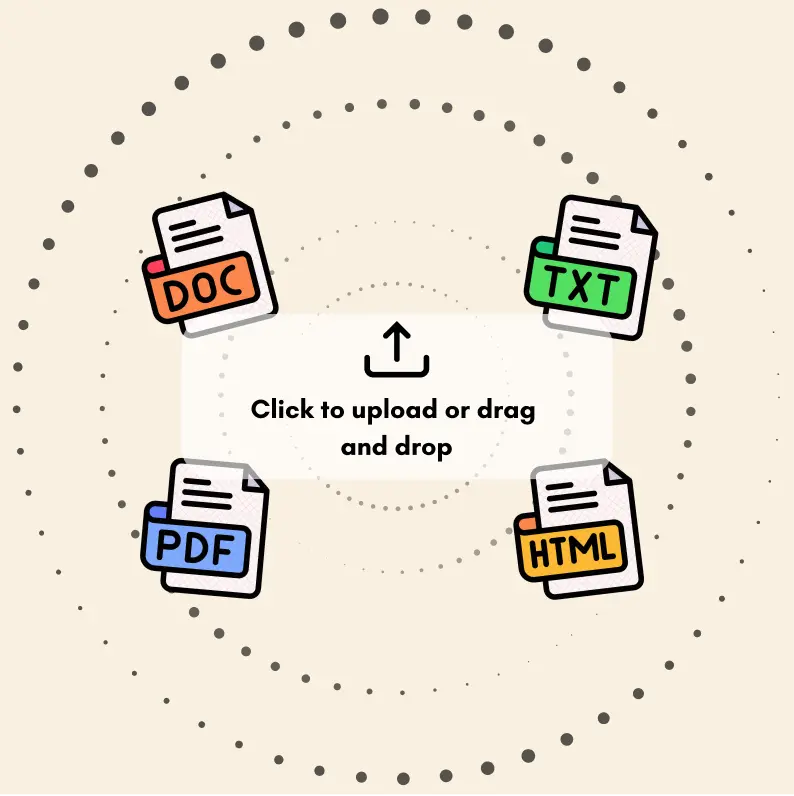

Speed run your documents

Upload documents to your Zemith library and transform them with AI-powered chat, podcast generation, summaries, and more

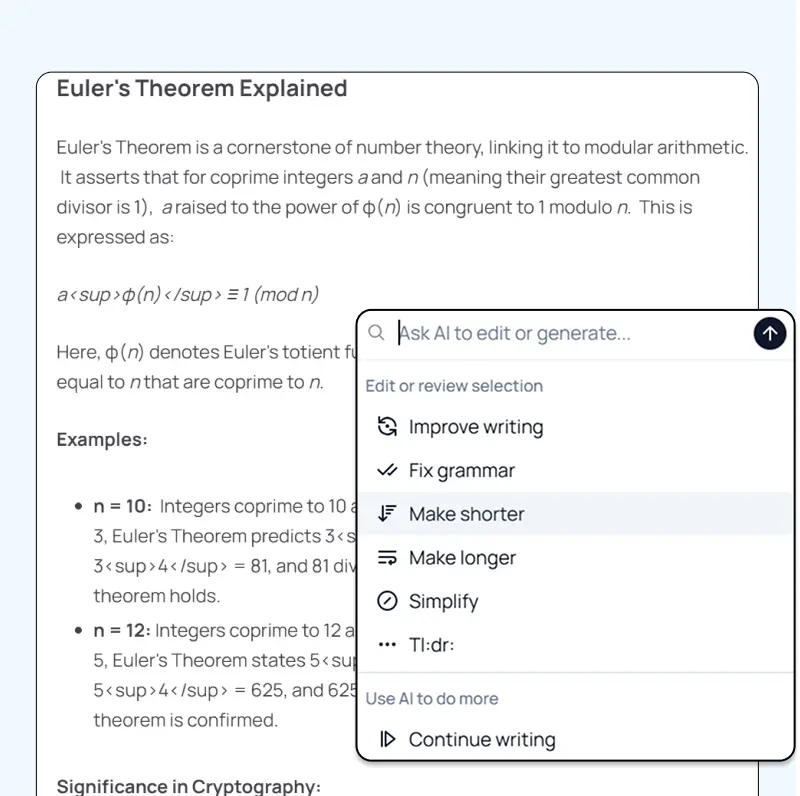

Transform Your Writing Process

Elevate your notes and documents with AI-powered assistance that helps you write faster, better, and with less effort

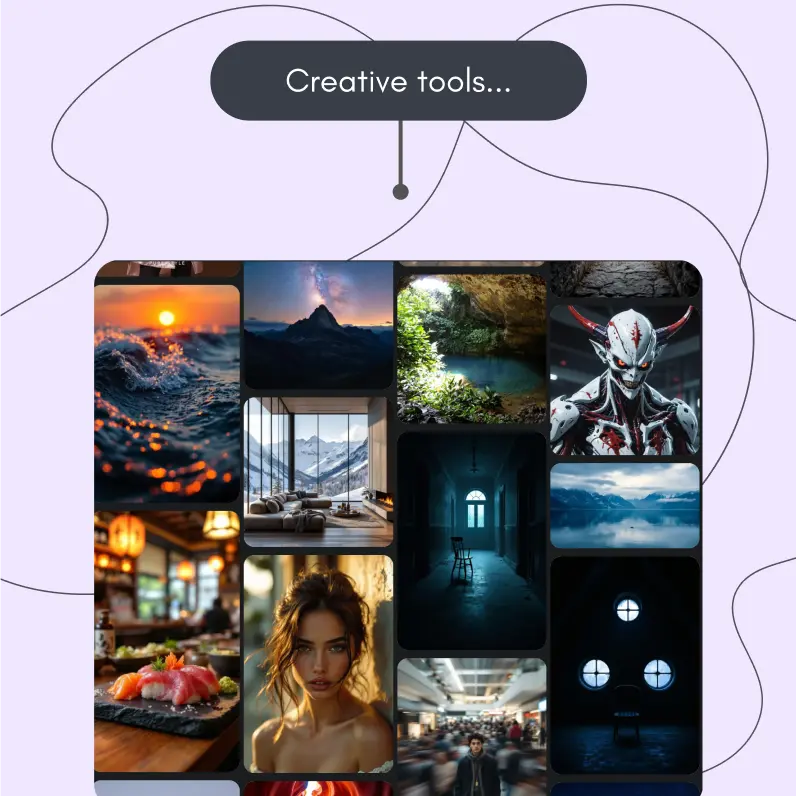

Unleash Your Visual Creativity

Transform ideas into stunning visuals with powerful AI image generation and editing tools that bring your creative vision to life

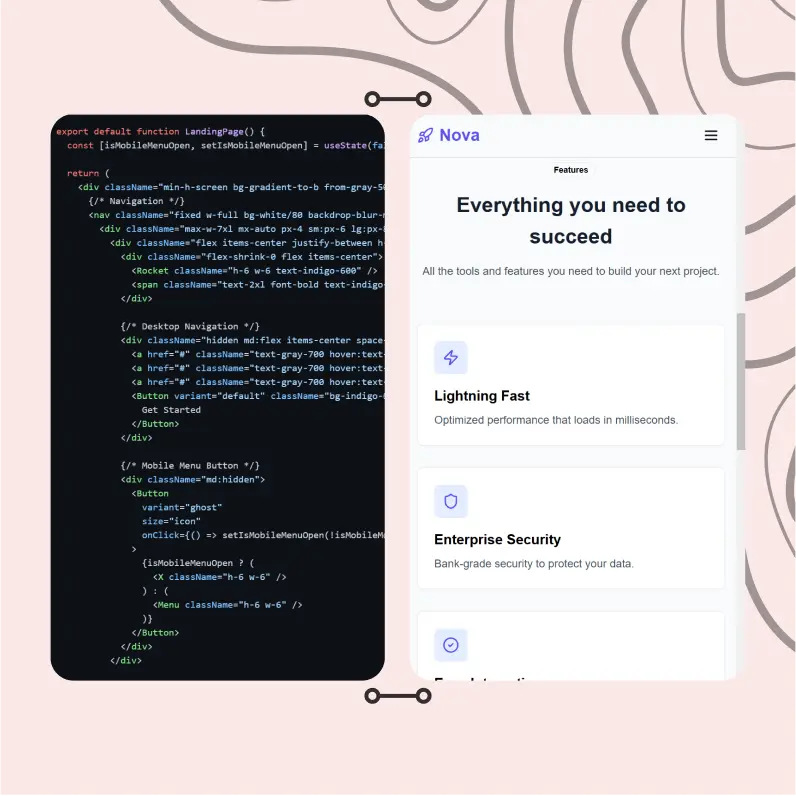

Accelerate Your Development Workflow

Boost productivity with an AI coding companion that helps you write, debug, and optimize code across multiple programming languages

Powerful Tools for Everyday Excellence

Streamline your workflow with our collection of specialized AI tools designed to solve common challenges and boost your productivity

Live Mode for Real Time Conversations

Speak naturally, share your screen and chat in realtime with AI

AI in your pocket

Experience the full power of Zemith AI platform wherever you go. Chat with AI, generate content, and boost your productivity from your mobile device.

Deeply Integrated with Top AI Models

Beyond basic AI chat - deeply integrated tools and productivity-focused OS for maximum efficiency

Straightforward, affordable pricing

Save hours of work and research

Affordable plan for power users

Plus

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

Professional

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Access to Video Generation

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools
