How Audio to Text AI Unlocks Your Voice Data

Discover how audio to text AI technology works, what impacts its accuracy, and how you can use it to transform spoken words into valuable, searchable data.

Think about all the audio your business generates daily. Every client meeting, webinar, support call, and brainstorming session creates a mountain of valuable data. But for most companies, that value stays locked away, unheard and untapped.

This guide is about finding the key. We'll explore how audio-to-text AI transforms those fleeting spoken words into structured, searchable, and incredibly useful text.

From Sound Waves to Searchable Data

We're going to pull back the curtain on the technology that makes this conversion possible. You'll see why it’s moved from a niche gadget to an essential tool for any business looking to gain a serious competitive edge.

Along the way, we'll look at how platforms like Zemith put this power right at your fingertips. The goal? To help you uncover critical insights, find new efficiencies, and make information more accessible for everyone in your organization.

The Growing Demand for Voice Data

The move towards automated transcription isn't just a minor trend—it's a massive market shift. The Speech-to-Text API market is expected to jump from an estimated USD 4.42 billion in 2025 to USD 8.57 billion by 2030. That’s nearly double in just five years, highlighting a huge demand for tools that can reliably turn voice into data.
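As a quick sanity check on those figures, the growth can be expressed both as a multiple and as a compound annual growth rate (CAGR). This is a minimal sketch: the function name is illustrative, and the inputs are simply the two market estimates quoted above.

```python
def compound_annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that takes start_value to end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market estimates quoted above, in USD billions.
start_2025, end_2030 = 4.42, 8.57

growth_multiple = end_2030 / start_2025
cagr = compound_annual_growth_rate(start_2025, end_2030, years=5)

print(f"Growth multiple: {growth_multiple:.2f}x")
print(f"Implied CAGR: {cagr:.1%}")
```

The multiple comes out just under 2x, which matches the "nearly double" description, and the implied yearly growth rate is in the mid-teens.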

This explosive growth is fueled by major breakthroughs in artificial intelligence, making transcription tools more accurate and practical than ever for real-world business needs. If you want to get into the nitty-gritty of how it all works, this guide to voice to text AI technology is a great starting point.

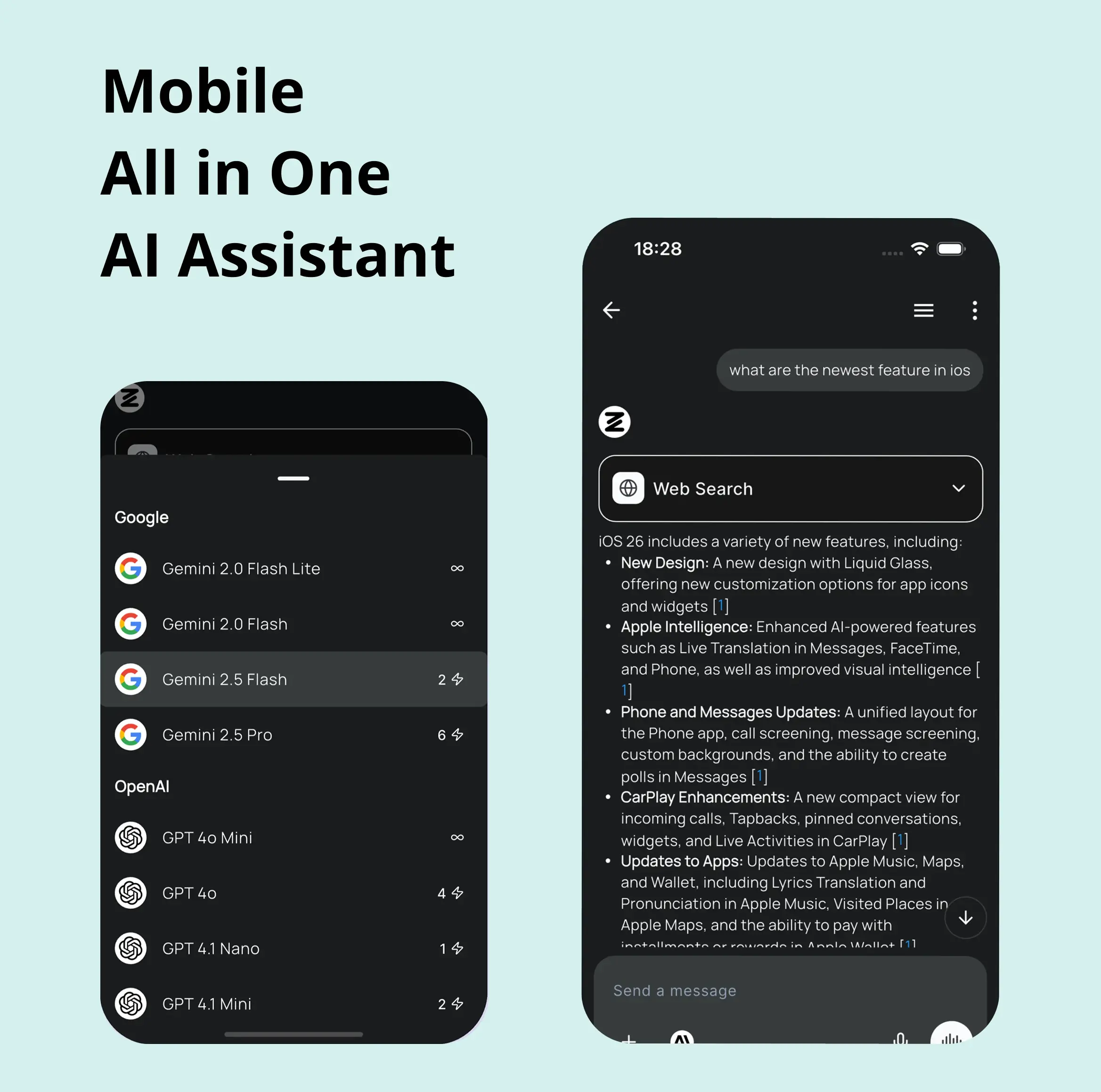

You can see how a platform like Zemith packages these complex functions into a clean, intuitive interface.

Having a centralized hub like this means you can manage, search, and analyze all your transcribed audio in one place. With Zemith, you eliminate the need to juggle multiple apps, creating a single source of truth for all your voice data.

The real magic of audio-to-text AI is its ability to turn unstructured, temporary conversations into a permanent, searchable, and analyzable knowledge base. It’s about making every spoken word count.

How AI Learns to Understand Human Speech

Ever wonder what happens in that split second between you speaking into a device and the words appearing on the screen? It's not magic, but a fascinating, high-speed process where a computer is taught to listen, process, and make sense of human language. Think of it as teaching a machine to hear not just sounds, but the meaning behind them.

This whole process is called Automatic Speech Recognition (ASR), and it's the engine that powers modern transcription. It’s a complex system that breaks your speech down into its tiniest parts and then cleverly reassembles them into text. This is the foundational technology platforms like Zemith use to deliver the remarkably fast and accurate transcripts businesses rely on today.

So, let's break it down stage by stage.

The Acoustic Model: Breaking Down Sounds

First up is the Acoustic Model. Its job is to listen to the raw audio—the soundwave itself—and chop it up into the smallest units of sound that differentiate one word from another. In linguistics, these are called phonemes.

For instance, the word "cat" is made of three distinct phonemes: the "k" sound, the "æ" sound, and the "t" sound. An Acoustic Model is trained on thousands upon thousands of hours of recorded speech to get incredibly good at recognizing these specific sounds, no matter who is talking. It learns to identify them across different accents, pitches, and speaking speeds. This is the first, fundamental layer of understanding—turning a jumble of sound into a neat sequence of phonetic building blocks.

The better the Acoustic Model is at this initial step, the more accurate the final transcript will be. This analysis lays the critical groundwork for what comes next.

The Language Model: Predicting the Right Words

Once the audio is converted into a string of phonemes, the Language Model steps in. This is where things get really smart, bringing context and probability into the mix. The Language Model's job is to figure out the most likely sequence of words based on the phonemes the Acoustic Model identified.

It works a lot like your own brain. If you hear someone say, "The quick brown fox jumps over the lazy...", you instantly fill in the blank with "dog." The Language Model does the same thing, just on a massive scale. It has analyzed enormous libraries of text to learn which words and phrases tend to show up together.

A strong Language Model is what separates a basic, clumsy transcription from an intelligent one. It’s how the AI can tell the difference between "to," "too," and "two"—not by the sound alone, but by understanding the words around them. This ensures the final text is not just phonetically plausible but grammatically and contextually correct.
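To make the "to / too / two" idea concrete, here is a toy sketch of a bigram language model that picks a homophone based on its neighbors. The corpus, function names, and scoring rule are all illustrative assumptions; real language models are trained on vastly more text and are usually neural networks rather than raw counts.

```python
from collections import Counter

# Tiny illustrative corpus; a production language model learns from far more text.
CORPUS = [
    "i want to go home",
    "i want two tickets",
    "she has two cats",
    "that is too loud",
    "it is too late to go",
    "we need to leave",
]


def train_bigram_counts(sentences):
    """Count how often each word is immediately followed by each other word."""
    counts = Counter()
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        counts.update(zip(words, words[1:]))
    return counts


def pick_homophone(prev_word, next_word, candidates, counts):
    """Choose the candidate that fits best between its two neighbors.

    One is added to every count so that unseen word pairs do not zero out the score.
    """
    def score(word):
        return (counts[(prev_word, word)] + 1) * (counts[(word, next_word)] + 1)

    return max(candidates, key=score)


counts = train_bigram_counts(CORPUS)
homophones = ["to", "too", "two"]

print(pick_homophone("want", "go", homophones, counts))   # "I want __ go"
print(pick_homophone("is", "late", homophones, counts))   # "it is __ late"
print(pick_homophone("has", "cats", homophones, counts))  # "she has __ cats"
```

All three candidates sound identical, so the sound alone cannot decide between them. Only the surrounding words change the score.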

This predictive power is a huge part of what makes audio-to-text AI so effective. By recognizing linguistic patterns, it can make highly educated guesses to reconstruct speech with impressive accuracy. To see how this extends beyond just transcription, you can explore the many powerful applications of Natural Language Processing that drive today's most useful AI tools.

The Decoding Process: Assembling the Final Text

The final stage is Decoding. This is where everything comes together. The decoder takes the output from both the Acoustic and Language Models and acts like a final editor, weighing all the possibilities to construct the most probable sentence.

It looks at the phoneme sequence from the Acoustic Model and compares it against the likely word combinations suggested by the Language Model. The process often involves mapping out a complex web of potential word paths and then calculating which path is most likely to be correct.
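The path search described above can be sketched with a small Viterbi-style decoder. Everything here is a simplified assumption for illustration: the acoustic stage is represented as per-position word candidates with made-up probabilities (rather than phonemes), and the language model is a hand-written bigram table. Production decoders work on far larger search spaces, often with beam search.

```python
import math

# Hypothetical acoustic output: for each position, candidate words with probabilities.
ACOUSTIC_CANDIDATES = [
    {"i": 0.9, "eye": 0.1},
    {"want": 0.8, "wand": 0.2},
    {"two": 0.4, "to": 0.35, "too": 0.25},
    {"go": 0.7, "goal": 0.3},
]

# Hypothetical language-model bigram probabilities; unseen pairs fall back to FLOOR.
BIGRAM_PROB = {
    ("<s>", "i"): 0.5,
    ("i", "want"): 0.4,
    ("want", "to"): 0.6,
    ("want", "two"): 0.1,
    ("to", "go"): 0.5,
}
FLOOR = 0.01


def decode(candidates, bigram_prob, lm_weight=1.0):
    """Find the word path with the best combined acoustic + language-model log score."""
    # best maps each possible last word to (score of the best path ending there, path).
    best = {"<s>": (0.0, [])}
    for slot in candidates:
        new_best = {}
        for word, acoustic_p in slot.items():
            options = []
            for prev, (prev_score, prev_path) in best.items():
                lm_p = bigram_prob.get((prev, word), FLOOR)
                score = prev_score + math.log(acoustic_p) + lm_weight * math.log(lm_p)
                options.append((score, prev_path + [word]))
            new_best[word] = max(options, key=lambda option: option[0])
        best = new_best
    return max(best.values(), key=lambda option: option[0])[1]


with_lm = " ".join(decode(ACOUSTIC_CANDIDATES, BIGRAM_PROB))
acoustic_only = " ".join(decode(ACOUSTIC_CANDIDATES, BIGRAM_PROB, lm_weight=0.0))

print("Acoustic + language model:", with_lm)
print("Acoustic only:            ", acoustic_only)
```

Setting `lm_weight` to zero shows what the decoder would choose from sound alone, which makes the language model's contribution easy to see.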

This infographic gives a simple view of how these technical steps translate into real business value.

As the visual shows, a smooth transcription process built on these models leads directly to better accuracy and significant cost savings. By integrating these complex systems, a platform like Zemith can turn spoken words into a valuable, searchable business asset in near real-time.

What Determines Transcription Accuracy

Ever wondered why one AI transcription comes back nearly perfect, while another is a jumbled mess? The truth is, not all AI transcriptions are created equal. The difference between a clean, usable document and hours of frustrating edits often boils down to just a few key factors.

Think of it like trying to have a conversation. If someone is mumbling from across a loud room, you’ll struggle to catch every word. Audio-to-text AI works on the exact same principle. The journey from spoken word to accurate text is all about the quality of the signal it receives.

The Foundation of Accuracy: Audio Quality

The single biggest influence on transcription accuracy is the quality of the source audio. It’s a simple case of "garbage in, garbage out." No matter how sophisticated an AI model is, it can't accurately transcribe what it can't clearly "hear."

Here’s what really matters:

- Background Noise: A humming air conditioner, street traffic, or even the clatter of a coffee shop can seriously confuse an AI. These sounds mask the speaker's voice, leading to bizarre errors, like a cough being transcribed as an actual word.

- Microphone Quality: This one’s a biggie. A professional-grade microphone captures a crisp, rich sound signal. Your built-in laptop or phone mic, on the other hand, often produces thin, distorted audio. Better hardware gives the AI much more clean data to analyze.

- Speaker Proximity: The distance between the speaker and the microphone is crucial. A voice that's too far away sounds weak and muffled, making it incredibly difficult for the AI to process.

If you want top-tier results, start by controlling your recording environment. Minimizing background noise and using a decent microphone are two simple steps that pay massive dividends in accuracy.
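One common way to put a number on "clean versus noisy" is the signal-to-noise ratio (SNR), measured in decibels. The sketch below is a simplified illustration, not part of any particular transcription product: it uses synthetic sine waves as stand-ins for a speech segment and a noise-only segment, and the helper names are hypothetical. In a real recording the speech segment also contains the noise, so this is only a rough estimate.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of audio samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def snr_db(speech_samples, noise_samples):
    """Signal-to-noise ratio in decibels from a speech segment and a noise-only segment."""
    return 20 * math.log10(rms(speech_samples) / rms(noise_samples))


# Synthetic stand-ins for real recordings, one second each at 16 kHz:
# a 200 Hz tone as "speech" and a much quieter 50 Hz hum as "background noise".
sample_rate = 16_000
speech = [0.5 * math.sin(2 * math.pi * 200 * n / sample_rate) for n in range(sample_rate)]
hum = [0.05 * math.sin(2 * math.pi * 50 * n / sample_rate) for n in range(sample_rate)]

print(f"Estimated SNR: {snr_db(speech, hum):.1f} dB")
```

A higher value means the voice stands out more clearly from the background. Moving the microphone closer or quieting the room both raise it.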

The table below shows just how much of a difference audio quality can make. Notice how a clear input leads to a readable transcript, while a poor one results in gibberish.

Impact of Audio Quality on AI Transcription Accuracy

As you can see, the quality of your source file is the foundation for everything else. Taking a few moments to ensure a clean recording can save you hours of editing later.

Human Factors: Speaker Characteristics

Beyond the technical setup, the way people actually speak introduces another layer of complexity. Human speech is wonderfully diverse, but that variety can either help or hinder audio-to-text AI.

Some of the most common human factors include:

- Accents and Dialects: AI models are trained on huge speech datasets, but they can still be thrown off by regional accents they haven't encountered often. A strong, unfamiliar accent can cause the error rate to climb.

- Speaking Pace: Talking too fast often causes words to blur together, making it tough for the AI to separate them. On the flip side, speaking unnaturally slowly with long, awkward pauses can disrupt the AI’s flow.

- Overlapping Speech: This is the ultimate stress test for any transcription service. When multiple people talk at once, their audio signals get tangled, almost always resulting in jumbled, incomplete text.

The ultimate goal is to provide the AI with the clearest signal possible. By encouraging speakers to talk one at a time and at a moderate pace, you give the transcription engine a much better chance of success, turning a potentially chaotic recording into a clean, organized script.

The Challenge of Specialized Language

Finally, the vocabulary itself can be a major roadblock. Most AI models are fantastic at transcribing everyday conversation, but they often trip up on specialized terminology.

Imagine a medical lecture full of anatomical terms, a legal proceeding with specific case law, or a tech conference discussing software frameworks. Words like "pharmacokinetics," "subpoena duces tecum," or "Kubernetes" just aren't part of the standard vocabulary.

This is where a truly powerful platform proves its worth. A basic transcription tool will likely mangle this jargon, leaving you with a nonsensical transcript. But an advanced solution like Zemith lets you create custom vocabularies. You can "teach" the AI your industry-specific terms, unique product names, or company acronyms ahead of time. This simple step biases the model toward those terms, so it recognizes and transcribes them far more reliably. For professionals who rely on precise documentation, this feature is an absolute game-changer.
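To make the idea concrete, here is a minimal sketch of one way a custom vocabulary can be applied: as a post-processing pass that snaps near-miss words to known terms. This is an illustrative assumption, not a description of how Zemith or any specific engine implements the feature (many engines bias the recognizer itself instead). The function name `apply_custom_vocabulary` and the sample sentence are hypothetical.

```python
import difflib


def apply_custom_vocabulary(transcript, vocabulary, cutoff=0.8):
    """Replace words that closely resemble a custom term with that term."""
    lookup = {term.lower(): term for term in vocabulary}
    corrected = []
    for word in transcript.split():
        # Compare without surrounding punctuation, then put the punctuation back.
        stripped = word.strip(".,!?;:")
        match = difflib.get_close_matches(stripped.lower(), list(lookup), n=1, cutoff=cutoff)
        if stripped and match:
            corrected.append(word.replace(stripped, lookup[match[0]]))
        else:
            corrected.append(word)
    return " ".join(corrected)


vocabulary = ["Kubernetes", "Zemith"]
raw = "We deployed it on kubernetis and shared the notes in zemmith."
fixed = apply_custom_vocabulary(raw, vocabulary)

print(fixed)
```

The `cutoff` value is a trade-off: set it too low and ordinary words get rewritten into jargon, set it too high and genuine near-misses slip through.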

How Businesses Are Putting Audio-to-Text AI to Work

The real test of any technology isn't what it can do, but what it's actually doing for people. In the case of audio-to-text AI, the impact is huge. It’s turning mountains of old audio files—once just sitting in archives—into a searchable goldmine of insights. Companies are finally moving past the slow, tedious work of manual transcription to unlock efficiencies they could only dream of before.

This isn't just about saving a little time; it's a fundamental shift in strategy. The market for Audio AI recognition is expected to explode from an estimated USD 5.23 billion in 2024 to over USD 19.63 billion by 2033. This growth isn't just hype—it’s driven by real-world adoption in sectors like media, healthcare, and finance, where AI is making everything from content production to patient documentation faster and smarter. You can dive deeper into this growth and what's behind it in the full audio AI recognition report.

For any business, getting on board with a platform like Zemith isn't just about keeping pace. It's about setting the pace.

Speeding Up Media and Content Creation

If you're a journalist or a content creator, you know that speed is everything. The clock is always ticking when you're trying to break a story or get a podcast out. Manually transcribing a single one-hour interview can easily eat up four or five hours—a massive bottleneck that holds up the whole creative process.

This is where audio-to-text AI completely flips the script.

- Fast-Track Interview Transcription: A journalist can drop an interview recording into Zemith and get a complete, accurate transcript back in minutes. This means they can instantly find the best quotes, check facts, and start writing their story.

- Building Searchable Archives: Media organizations can convert decades of audio and video into a massive text database. Imagine a reporter using Zemith to instantly search every interview they've ever done for a specific keyword.

- Getting More from Your Content: A marketer can use Zemith to take a single webinar recording, transcribe it, and spin it into a dozen different assets—blog posts, social media clips, and email newsletters—multiplying its value instantly.

With a tool like Zemith, a media team can centralize its audio content, making it all searchable and ready to use. It becomes a shared brain for the whole team, ensuring no great quote or critical detail ever gets lost again.

Making Legal and Compliance Workflows More Efficient

The legal world is built on documentation. Every single word from a deposition, a client meeting, or a courtroom matters, and it has to be recorded perfectly. For years, that meant relying on expensive and slow human transcription services.

Audio-to-text AI offers a much faster and more secure way forward. Legal teams are now using it to create searchable, time-stamped text records of all their conversations, which dramatically speeds up how they prepare for and review cases.

Think about it: by turning audio into structured text, a lawyer can search for a specific phrase across thousands of hours of deposition recordings in seconds. That's a game-changer for finding evidence and building a solid case.
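Once transcripts are stored as time-stamped segments, that kind of phrase search is straightforward. The sketch below assumes a simple, hypothetical data shape (a recording name, a start time in seconds, and the text); real platforms will have their own formats and typically use a proper search index rather than a linear scan.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    recording: str        # hypothetical file name of the source recording
    start_seconds: float  # where this segment begins in the recording
    text: str             # transcribed text for the segment


def format_timestamp(seconds):
    """Render seconds as HH:MM:SS."""
    minutes, secs = divmod(int(seconds), 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def search_segments(segments, phrase):
    """Return every segment whose text contains the phrase, ignoring case."""
    needle = phrase.casefold()
    return [segment for segment in segments if needle in segment.text.casefold()]


segments = [
    Segment("deposition_01.wav", 12.0, "Please state your name for the record."),
    Segment("deposition_01.wav", 3725.4, "The delivery date was changed in March."),
    Segment("deposition_02.wav", 98.0, "Nobody told me about the new Delivery Date."),
]

hits = search_segments(segments, "delivery date")
for hit in hits:
    print(f"{hit.recording} @ {format_timestamp(hit.start_seconds)}: {hit.text}")
```

Because each hit carries a timestamp, a reviewer can jump straight to the matching moment in the original audio.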

Platforms like Zemith are especially powerful here because they come with enterprise-level security, keeping sensitive client information locked down. Plus, the ability to quickly transcribe and analyze client calls helps firms prove they're meeting compliance rules with a clear, verifiable record of communication.

Finding Hidden Customer and Market Insights

What are your customers really trying to tell you? Your support calls, sales meetings, and feedback sessions are packed with honest, unfiltered opinions about your products, your competitors, and what the market wants next. The problem has always been how to make sense of all that unstructured audio data.

This is where audio-to-text AI steps in as a powerful business intelligence tool.

- Analyze Customer Sentiment: Transcribe your support calls in Zemith, and you can instantly analyze the text to see how customers are feeling. Are they happy? Frustrated? You can spot common problems before they blow up.

- Gather Product Feedback: Marketers can transcribe user calls. This gives them a direct line to recurring feature requests and pain points, feeding valuable intel straight to the development team.

- Track Competitor Mentions: Sales teams can search call transcripts to find out how often competitors are mentioned and why. This is raw, real-time competitive intelligence that you can't get anywhere else.
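As a small illustration of the last point, counting competitor mentions across transcripts takes only a few lines once the audio is text. The competitor names and sample transcripts below are made up, and the function name is hypothetical. A real analysis would also want to capture the surrounding sentence to understand why a competitor came up.

```python
import re
from collections import Counter


def count_mentions(transcripts, names):
    """Count whole-word, case-insensitive mentions of each name across transcripts."""
    counts = Counter({name: 0 for name in names})
    for text in transcripts:
        for name in names:
            pattern = rf"\b{re.escape(name)}\b"
            counts[name] += len(re.findall(pattern, text, flags=re.IGNORECASE))
    return counts


# Made-up call transcripts and competitor names, for illustration only.
transcripts = [
    "We looked at Acme last quarter, but Acme's onboarding was slow.",
    "Globex quoted us a lower price.",
    "Honestly, the acme dashboard is confusing.",
]
mentions = count_mentions(transcripts, ["Acme", "Globex", "Initech"])

for name, count in mentions.most_common():
    print(f"{name}: {count}")
```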

Many companies are now exploring voice-activated technologies for insights to pull this kind of valuable information from spoken conversations. By building these capabilities right in, a platform like Zemith lets marketing and sales teams stop guessing and start making decisions based on what their customers are actually saying.

Choosing the Right AI Transcription Tool

Now that we've unpacked what audio-to-text AI can do, it's time to talk about picking the right tool for the job. The market is full of options, and they all claim to be the best. But the reality is, the "best" tool is the one that fits your specific needs.

Making a smart choice means looking past the marketing hype. You need to focus on what really matters: performance, security, and whether it will actually slot into your daily work without causing more headaches than it solves. This is about finding a true partner, not just another piece of software.

Core Evaluation Criteria

When you start comparing different services, there are four things that are absolutely non-negotiable: accuracy, speed, security, and scalability. If a tool falls short in any one of these areas, what seems like a great deal can quickly become a major bottleneck for your team.

Start by digging into these fundamentals:

- Transcription Accuracy: How does it perform in the real world? Aim for services that promise accuracy rates above 90% for clear audio. Even better, run a few of your own files through a trial—especially ones with tricky industry jargon or diverse accents.

- Processing Speed: Time is money. A solid tool should turn around a transcript in minutes, not hours. The whole point is to get actionable information into your team's hands faster.

- Data Security: Your audio recordings can hold incredibly sensitive conversations. Make sure any provider you consider uses strong encryption and has a straightforward privacy policy. You need to know your data, and your clients' data, is safe.

- Scalability: A tool that works for you today should also work for you tomorrow. It needs to handle a growing number of files and users as your business expands, all without slowing down.

This is where a platform like Zemith really shines. It was built from the ground up to nail these core requirements, pairing high-accuracy models with security protocols that businesses can trust. That solid foundation means you get a reliable service right out of the box.

Beyond the Basics: Pricing and Integration

Once you’ve vetted the basics, it’s time to look at the bigger picture: cost and integration. A standalone transcription tool is fine, but its true power is unlocked when it works seamlessly with the other tools you already use.

Think about whether you need a simple API or an all-in-one platform. An API is perfect for developers who want to bake transcription into their own software. For most businesses, though, an integrated platform delivers much more value, much faster.

A comprehensive solution like Zemith eliminates the need to juggle multiple subscriptions. By bringing transcription, document analysis, and content creation into a single workspace, you not only save money but also create a much more efficient workflow for your team.

For a deeper dive into making this choice, you can read more about the different types of AI audio to text solutions and see how they map to different business needs.

Making a Practical and Powerful Choice

Ultimately, the best audio-to-text AI tool should feel like a natural part of your team. It has to be powerful enough for your most demanding tasks but intuitive enough that anyone can jump in and use it without weeks of training.

Here’s a final checklist to run through before you make a decision:

- User Experience: Is the interface clean and easy to navigate? Can a new hire get up to speed quickly?

- Feature Set: Does it have the essentials, like identifying different speakers or letting you add custom words?

- Support: If you get stuck, how easy is it to get help from a real person?

- Cost-Effectiveness: Does the price make sense for how much you'll use it?

By carefully considering these points, you can find a tool that delivers real value from day one and continues to pay for itself over the long haul. A solution like Zemith, which marries a user-friendly design with powerful, secure, and scalable performance, is a practical choice for any business ready to turn its audio into a real strategic asset.

Tapping into Your Audio Goldmine

So, we've walked through the what, how, and why of audio-to-text AI. It's clear that turning spoken words into structured, searchable data isn't just a neat trick anymore—it's a fundamental shift in how businesses can operate and learn.

Think about all the conversations that happen every single day. Customer calls, team meetings, brainstorming sessions—they're packed with insights that are just too important to let disappear into thin air. Every one of those conversations holds clues that can sharpen your strategy, refine your products, and show you exactly where the market is headed.

The moment a call ends or a meeting adjourns, that intelligence starts to fade. It's time to stop that loss and start building a permanent, searchable archive of your company's collective voice.

Taking that first step is easier than you might think. By exploring a platform like Zemith, you can see for yourself how your team's voice data can be turned into one of your most powerful assets. Integrating this into your daily workflow gives you a single source of truth that everyone in the organization can draw from.

Of course, once you have all this newly accessible information, you need a smart way to organize it. To get that part right, take a look at our guide on building effective knowledge management systems that put crucial insights right where your team can find them.

Common Questions About Audio-to-Text AI

Diving into the world of audio-to-text AI naturally brings up a few questions. Let's tackle some of the most common ones to give you a clearer picture of how this technology works in the real world.

Just How Accurate Is It, Really?

This is the big one. Under perfect lab conditions—think a single person speaking clearly into a high-quality mic with zero background noise—the best systems can hit accuracy rates over 95%.

But real life is messy. Accents, background chatter, people talking over each other, and specialized jargon can all knock that number down. That’s why platforms like Zemith focus on real-world performance, building in features like custom vocabularies to make sure the AI correctly understands and transcribes terms specific to your industry.
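If you want to measure accuracy on your own recordings, the usual metric is word error rate (WER): the number of substituted, deleted, and inserted words divided by the number of words in a trusted reference transcript. An "accuracy" figure is often roughly 1 minus WER, though that is an assumption here, since vendors do not always say how they calculate it. The sketch below is a minimal, self-contained implementation with a made-up example sentence.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between hypothesis and reference, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # previous[j] holds the edit distance between the reference words seen so far
    # (before the current one) and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


reference = "the quick brown fox jumps over the lazy dog"
hypothesis = "the quick brown box jumps over lazy dog"  # one wrong word, one missing word

wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.1%}  (approximate accuracy: {1 - wer:.1%})")
```

Running a few of your own files through a trial and scoring them this way gives a much better picture than any headline accuracy number.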

Is My Data Safe When I Upload It?

Data security should be at the top of your list. Any reputable transcription service will treat your information like gold, using strong security measures to protect it. The baseline here is encryption in transit and at rest: your audio is encrypted while it is uploaded (typically over TLS) and while it is stored, although the service still has to decrypt it briefly in order to transcribe it.

Top-tier platforms like Zemith are built on secure cloud infrastructure and have strict privacy policies in place. Before you upload anything sensitive, always check a provider's security practices and make sure they meet data protection standards.

The security of your data is non-negotiable. A trustworthy audio-to-text AI partner will be transparent about their security practices, ensuring your confidential information is always protected from unauthorized access.

Can the AI Tell Who's Speaking?

Absolutely. This handy feature is called speaker diarization. It's how a sophisticated AI can listen to a recording with multiple people and tell their voices apart. The final transcript will then label the text with something like "Speaker 1" and "Speaker 2."

This is a game-changer for making sense of recordings with more than one participant, like:

- Team meetings

- Panel discussions

- Journalist interviews

- Customer support calls
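Diarized output usually arrives as a list of short segments, each tagged with a speaker label. The sketch below shows one simple way to turn such segments into a readable, turn-by-turn transcript. The input format (pairs of speaker label and text) and the function name are assumptions for illustration; actual tools return their own structures.

```python
def format_diarized_transcript(segments):
    """Merge consecutive segments from the same speaker and label each turn."""
    turns = []
    for speaker, text in segments:
        if turns and turns[-1][0] == speaker:
            turns[-1][1].append(text)  # same speaker is still talking
        else:
            turns.append((speaker, [text]))  # a new speaker starts a new turn
    return "\n".join(f"{speaker}: {' '.join(parts)}" for speaker, parts in turns)


# Hypothetical diarization output: (speaker label, transcribed text) pairs.
segments = [
    ("Speaker 1", "Thanks for joining."),
    ("Speaker 1", "Let's start with the roadmap."),
    ("Speaker 2", "Sounds good."),
    ("Speaker 1", "First item is pricing."),
]

transcript = format_diarized_transcript(segments)
print(transcript)
```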

With a platform like Zemith, you don’t just get a wall of text. You get a clean, organized conversation that’s easy to read and search. The AI does the tedious work of figuring out who said what, so you can jump straight to pulling out the important insights.

Ready to unlock the insights hidden in your audio files? With Zemith, you can transform conversations into searchable, actionable data. Stop letting valuable information fade away—start your journey with Zemith today and discover the power of a truly integrated AI workspace.

Explore Zemith Features

Introducing Zemith

The best tools in one place, so you can quickly leverage the best tools for your needs.

All in One AI Platform

Go beyond AI Chat, with Search, Notes, Image Generation, and more.

Cost Savings

Access latest AI models and tools at a fraction of the cost.

Get Sh*t Done

Speed up your work with productivity, work and creative assistants.

Constant Updates

Receive constant updates with new features and improvements to enhance your experience.

Features

Selection of Leading AI Models

Access multiple advanced AI models in one place - featuring Gemini-2.5 Pro, Claude 4.5 Sonnet, GPT 5, and more to tackle any tasks

Speed run your documents

Upload documents to your Zemith library and transform them with AI-powered chat, podcast generation, summaries, and more

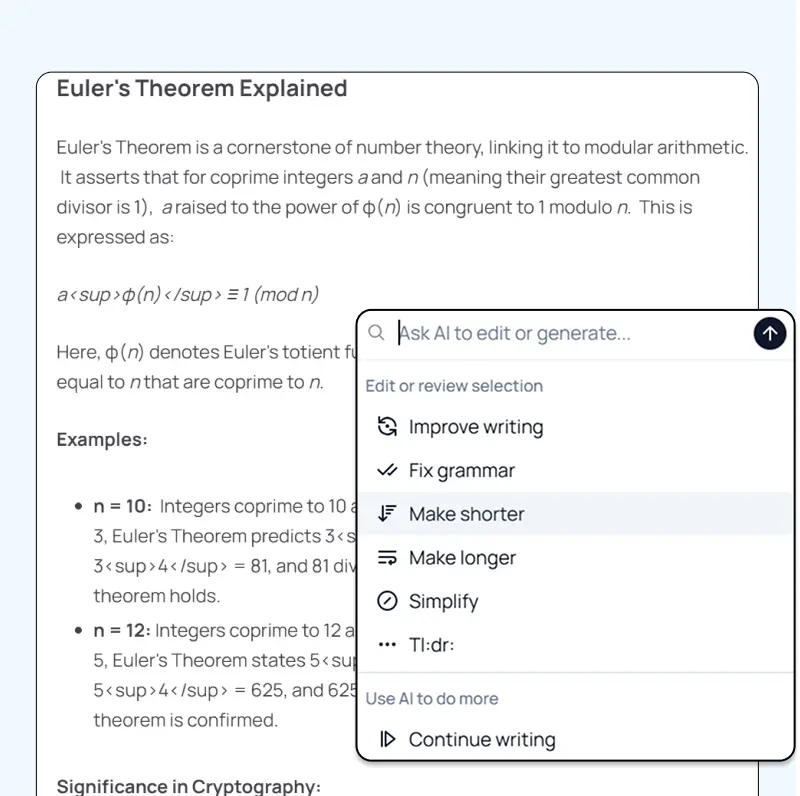

Transform Your Writing Process

Elevate your notes and documents with AI-powered assistance that helps you write faster, better, and with less effort

Unleash Your Visual Creativity

Transform ideas into stunning visuals with powerful AI image generation and editing tools that bring your creative vision to life

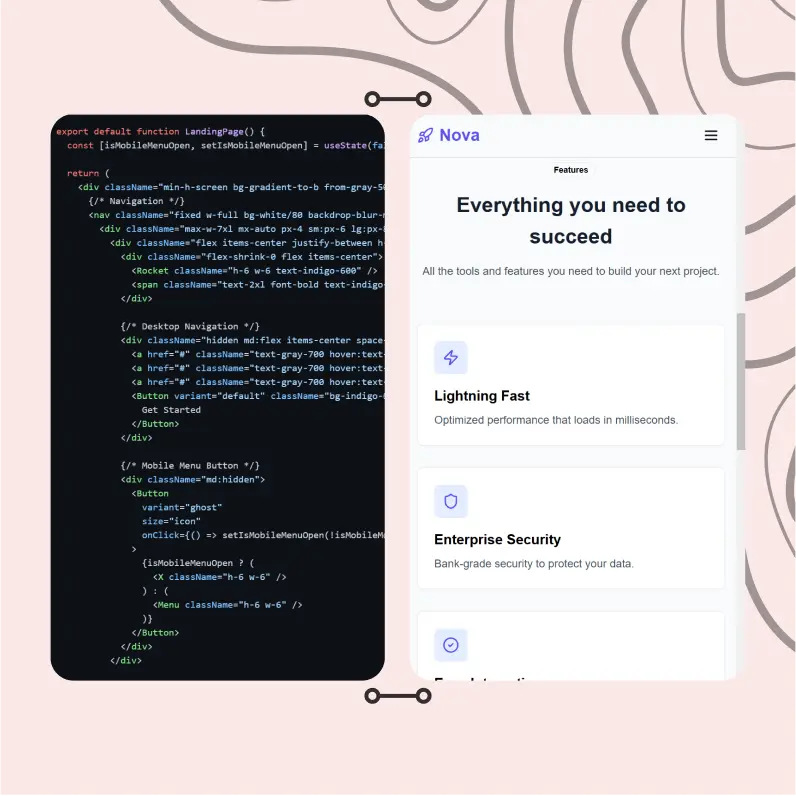

Accelerate Your Development Workflow

Boost productivity with an AI coding companion that helps you write, debug, and optimize code across multiple programming languages

Powerful Tools for Everyday Excellence

Streamline your workflow with our collection of specialized AI tools designed to solve common challenges and boost your productivity

Live Mode for Real Time Conversations

Speak naturally, share your screen and chat in realtime with AI

AI in your pocket

Experience the full power of Zemith AI platform wherever you go. Chat with AI, generate content, and boost your productivity from your mobile device.

Deeply Integrated with Top AI Models

Beyond basic AI chat - deeply integrated tools and productivity-focused OS for maximum efficiency

Straightforward, affordable pricing

Save hours of work and research

Affordable plan for power users

Plus

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

Professional

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools

What Our Users Say

Great Tool after 2 months usage

simplyzubair

I love the way multiple tools they integrated in one platform. So far it is going in right dorection adding more tools.

Best in Kind!

barefootmedicine

This is another game-change. have used software that kind of offers similar features, but the quality of the data I'm getting back and the sheer speed of the responses is outstanding. I use this app ...

simply awesome

MarianZ

I just tried it - didnt wanna stay with it, because there is so much like that out there. But it convinced me, because: - the discord-channel is very response and fast - the number of models are quite...

A Surprisingly Comprehensive and Engaging Experience

bruno.battocletti

Zemith is not just another app; it's a surprisingly comprehensive platform that feels like a toolbox filled with unexpected delights. From the moment you launch it, you're greeted with a clean and int...

Great for Document Analysis

yerch82

Just works. Simple to use and great for working with documents and make summaries. Money well spend in my opinion.

Great AI site with lots of features and accessible llm's

sumore

what I find most useful in this site is the organization of the features. it's better that all the other site I have so far and even better than chatgpt themselves.

Excellent Tool

AlphaLeaf

Zemith claims to be an all-in-one platform, and after using it, I can confirm that it lives up to that claim. It not only has all the necessary functions, but the UI is also well-designed and very eas...

A well-rounded platform with solid LLMs, extra functionality

SlothMachine

Hey team Zemith! First off: I don't often write these reviews. I should do better, especially with tools that really put their heart and soul into their platform.

This is the best tool I've ever used. Updates are made almost daily, and the feedback process is very fast.

reu0691

This is the best AI tool I've used so far. Updates are made almost daily, and the feedback process is incredibly fast. Just looking at the changelogs, you can see how consistently the developers have ...